Reinforcement Learning in Machine Learning

In this article, we will cover one of the most important concepts in Machine Learning: Reinforcement Learning. This type of learning has many applications, which makes it one of the key concepts of ML. Let’s start!

What is Reinforcement Learning in Machine Learning?

Reinforcement Learning is a feedback-based Machine Learning technique in which an agent learns to behave in an environment by performing actions and observing their results. For each good action, the agent gets positive feedback or a reward, and for each bad action, it gets negative feedback or a penalty.

In Reinforcement Learning, the agent learns automatically from this feedback without any labeled data, unlike in supervised learning.

Since there is no labeled data, the agent has to learn from its own experience rather than from supervision.

Reinforcement Learning solves a particular type of problem in which decision making is sequential and the goal is long-term, such as game playing and robotics.

The agent interacts with the environment and explores it on its own. The primary goal of an agent in reinforcement learning is to improve its performance by collecting the maximum positive reward.

The agent learns by trial and error, and on the basis of this experience, it learns to perform the task better. We can therefore say that Reinforcement Learning is a machine learning method in which an intelligent agent, such as a computer program or an AI model, interacts with the environment and learns to act within it on its own. As an example of RL, consider a robotic dog that learns how to move its legs.

Reinforcement Learning is a core part of the Artificial Intelligence domain, and many AI agents are trained using it. In such cases, we do not need to pre-program the agent, as it learns from its own experience of interacting with the environment without step-by-step human instruction.

Working Of ML Reinforcement Learning

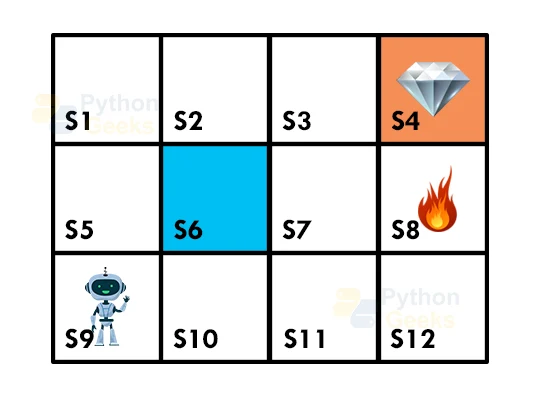

To understand how Reinforcement Learning works, let us consider an example of a maze environment that the agent has to explore. Consider the image of the maze that the agent has to deal with:

In the image, we can observe that the agent is at the very first block of the maze. The maze contains block S6, which is a wall, block S8, which is a fire pit, and block S4, which holds a diamond.

The agent cannot cross the S6 block, as it is a solid wall. If the agent reaches the S4 block, it gets a +1 reward; if it reaches the fire pit (the forbidden region), it gets a -1 reward. It can take four actions: move up, move down, move left, and move right.

The agent can take any path to reach the final point, but we want it to get there in as few steps as possible. Suppose the agent follows the path S9-S5-S1-S2-S3; it then reaches the diamond and gets the +1 reward.
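The maze described above can be sketched as a tiny environment. This is only an illustration: the 3x4 layout (S1 to S4 in the top row, S5 to S8 in the middle, S9 to S12 at the bottom) is an assumption inferred from the path S9-S5-S1-S2-S3, and the names `step` and `run_episode` are hypothetical helpers, not part of any library.

```python
# Minimal sketch of the maze, assuming a 3x4 grid numbered row by row:
#   S1  S2         S3   S4 (diamond, +1)
#   S5  S6 (wall)  S7   S8 (fire pit, -1)
#   S9  S10        S11  S12
COLS, ROWS = 4, 3
WALL, DIAMOND, FIRE = 6, 4, 8
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def step(state, action):
    """Apply one action and return (next_state, reward, done)."""
    row, col = divmod(state - 1, COLS)
    d_row, d_col = MOVES[action]
    new_row, new_col = row + d_row, col + d_col
    next_state = state  # default: hitting the boundary or the wall means staying put
    if 0 <= new_row < ROWS and 0 <= new_col < COLS:
        candidate = new_row * COLS + new_col + 1
        if candidate != WALL:
            next_state = candidate
    if next_state == DIAMOND:
        return next_state, 1, True
    if next_state == FIRE:
        return next_state, -1, True
    return next_state, 0, False


def run_episode(start, actions):
    """Follow a fixed list of actions; return the visited blocks and total reward."""
    state, total, visited = start, 0, [start]
    for action in actions:
        state, reward, done = step(state, action)
        visited.append(state)
        total += reward
        if done:
            break
    return visited, total


# The path from the text, followed by one more move right onto the diamond.
path, total_reward = run_episode(9, ["up", "up", "right", "right", "right"])
print(path, total_reward)
```

Under these assumptions, the agent visits S9, S5, S1, S2, S3, and S4 and collects a total reward of +1, while a move into the wall simply leaves it where it was.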

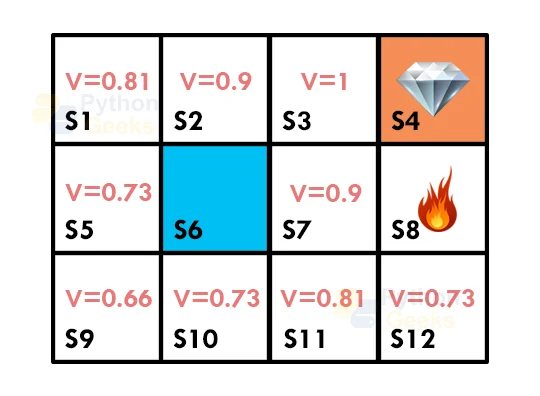

The agent will try to remember the steps it took to reach the final block. To memorize the steps, we program it to assign a value of 1 to each previous step.

This creates a dilemma for the agent: since every block on the path has the same value, it cannot tell whether it should move up or down. As a consequence, this approach is not suitable for guiding the agent to the destination. To solve the problem, we use the Bellman equation, which is the main concept behind reinforcement learning.

Terms Related to Reinforcement Machine Learning

Now that we are familiar with the working of Reinforcement Learning, we will try to understand some of the basic terms related to it.

1. Agent: An agent is an entity that can perceive and explore its environment and act upon it.

2. Environment: The environment is the situation in which the agent is present or by which it is surrounded. In RL, we usually assume that the environment is stochastic, which means it is random in nature.

3. Action: Actions are the moves or activities that an agent takes within the environment.

4. State: The state is the situation that the environment returns after each action that the agent takes.

5. Reward: It is the feedback that the agent receives from the environment to evaluate its action.

6. Policy: A policy is the strategy that the agent applies to choose the next action on the basis of the current state.

7. Value: It is the expected long-term return with the discount factor applied, as opposed to the short-term reward.

8. Q-value: It is similar to the value, but it takes one additional parameter: the current action of the agent.
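To make the idea of a discounted long-term return more concrete, here is a minimal sketch. The function name `discounted_return` and the example numbers are illustrative assumptions; the point is only that a reward received later counts for less than the same reward received now.

```python
def discounted_return(rewards, gamma):
    """Sum the rewards, discounting each one by gamma for every step of delay."""
    total = 0.0
    # Work backwards so each earlier step adds its reward to the discounted future.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Three steps with no reward, then +1 on the fourth step (assumed example).
late_reward = discounted_return([0, 0, 0, 1], gamma=0.9)
# The same +1 received immediately.
early_reward = discounted_return([1, 0, 0, 0], gamma=0.9)
print(late_reward, early_reward)
```

With a discount factor of 0.9, the delayed reward is worth about 0.729 at the start, whereas the immediate reward keeps its full value of 1.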

Key Features of Reinforcement Machine Learning

1. In Reinforcement Learning, we do not instruct the agent about the environment and what actions it needs to take.

2. RL works on the principle of trial and error.

3. The agent takes the next action and changes state according to the feedback from the previous action.

4. The reward is often delayed: the agent may receive it only after many actions.

5. The environment that the agent stays in is stochastic, and the agent needs to explore and analyze it to reach the maximum positive rewards.

Bellman Equation

The Bellman equation was introduced by the mathematician Richard Ernest Bellman in 1953, hence its name. It is associated with dynamic programming and is used to calculate the value of a decision problem at a certain point in terms of the values of the states that follow it.

It provides a way of calculating value functions in dynamic programming, and this approach led to modern reinforcement learning.

The key elements used in Bellman equations are:

- The action that the agent performs is referred to as “a”.

- The state that the agent is in is “s”, and the state reached by performing the action is “s'”.

- The reward/feedback that the agent obtains for each good and bad action is “R”.

- The discount factor is denoted by gamma, “γ”.

After considering all the above factors, we can write the Bellman equation as

V(s) = max_a [R(s, a) + γV(s')]

After calculating the values of all the blocks with this equation, the agent can find its path by moving at each step to the neighboring block with the highest value.
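As a rough illustration of how the block values come about, the sketch below applies the Bellman equation repeatedly to the maze. Several things here are assumptions rather than details from the article: the 3x4 layout numbered row by row, deterministic moves, a discount factor of 0.9, a reward that is paid on entering a block, and terminal blocks (S4 and S8) whose own value stays 0.

```python
# Sketch: apply V(s) = max_a [R(s, a) + gamma * V(s')] repeatedly to the maze.
# Assumed layout: 3x4 grid numbered row by row, S6 = wall,
# S4 = diamond (+1), S8 = fire pit (-1), deterministic moves.
COLS, ROWS = 4, 3
WALL, DIAMOND, FIRE = 6, 4, 8
GAMMA = 0.9  # assumed discount factor
MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def next_state(state, move):
    """Return the block reached from `state`; blocked moves leave it unchanged."""
    row, col = divmod(state - 1, COLS)
    new_row, new_col = row + move[0], col + move[1]
    if 0 <= new_row < ROWS and 0 <= new_col < COLS:
        candidate = new_row * COLS + new_col + 1
        if candidate != WALL:
            return candidate
    return state


def reward(state):
    """Reward for entering `state`."""
    return {DIAMOND: 1.0, FIRE: -1.0}.get(state, 0.0)


def value_iteration(sweeps=50):
    """Sweep over all blocks, applying the Bellman equation on each pass."""
    states = [s for s in range(1, ROWS * COLS + 1) if s != WALL]
    values = {s: 0.0 for s in states}
    for _ in range(sweeps):
        for s in states:
            if s in (DIAMOND, FIRE):
                continue  # terminal blocks: the episode ends, so the value stays 0
            values[s] = max(
                reward(next_state(s, m)) + GAMMA * values[next_state(s, m)]
                for m in MOVES
            )
    return values


values = value_iteration()
for s in (3, 2, 1, 5, 9):
    print(f"V(S{s}) = {values[s]:.3f}")
```

Under these assumptions, the values shrink by a factor of γ with every step away from the diamond (about 1, 0.9, 0.81, 0.729, and 0.656 for S3, S2, S1, S5, and S9), so the blocks no longer share the same value and the agent can tell which direction leads to the goal.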

Markov Decision Process

A Markov Decision Process, or MDP, is used to formalize reinforcement learning problems. If the environment is completely observable to the agent, then we can model its dynamics as a Markov process. In an MDP, the agent constantly interacts with the environment and performs actions; after each action, the environment responds and generates a new state.

An MDP is used to describe the environment in RL, and almost all RL problems can be formalized as MDPs.

An MDP is a tuple of four elements (S, A, Pa, Ra):

- A finite set of states S

- A finite set of actions A

- The probability Pa of transitioning from state S to state S’ due to action a.

- The reward Ra received after transitioning from state S to state S’ due to action a.
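The four elements of the tuple can be written down as a small data structure. This is a minimal sketch: the class name `MDP`, its field names, and the two-state example are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MDP:
    """A finite MDP (S, A, Pa, Ra); the field names are illustrative."""

    states: frozenset
    actions: frozenset
    # transitions[(s, a)] maps each next state s' to its probability Pa.
    transitions: dict
    # rewards[(s, a, s')] is the reward Ra for that transition (0 if absent).
    rewards: dict

    def expected_reward(self, state, action):
        """Average the reward over all possible next states."""
        return sum(
            prob * self.rewards.get((state, action, nxt), 0.0)
            for nxt, prob in self.transitions[(state, action)].items()
        )


# Hypothetical two-state example: "go" usually succeeds but sometimes slips.
mdp = MDP(
    states=frozenset({"start", "goal"}),
    actions=frozenset({"go"}),
    transitions={("start", "go"): {"goal": 0.8, "start": 0.2}},
    rewards={("start", "go", "goal"): 1.0},
)
print(mdp.expected_reward("start", "go"))
```

Here the action succeeds with probability 0.8 and earns a reward of 1, so the expected reward of taking "go" in "start" is about 0.8.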

MDP makes use of the Markov property, and to better understand the MDP, we need to learn about it.

Markov Property

The property says: “If the agent is in the current state s1, performs an action a1, and moves to the state s2, then the state transition from s1 to s2 depends only on the current state and action. It does not depend on past actions, rewards, or states.”

Markov Process

A Markov Process is a memoryless process with a sequence of random states S1, S2, ..., St that satisfies the Markov property. It is also known as a Markov chain, which is a tuple (S, P) of a state set S and a transition function P. These two components (S and P) define the dynamics of the system.
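A Markov chain (S, P) is easy to simulate, because the next state is sampled using only the current state. The sketch below is illustrative: the weather states, their probabilities, and the helper name `simulate_chain` are assumptions made up for this example.

```python
import random


def simulate_chain(transition, start, steps, rng):
    """Sample a state sequence in which each step depends only on the current state."""
    state, sequence = start, [start]
    for _ in range(steps):
        next_states = list(transition[state])
        weights = [transition[state][s] for s in next_states]
        # The history in `sequence` is never consulted: this is the Markov property.
        state = rng.choices(next_states, weights=weights)[0]
        sequence.append(state)
    return sequence


# Hypothetical chain: S = {sunny, rainy}, P given as one row per current state.
weather = {
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.5, "rainy": 0.5},
}
print(simulate_chain(weather, "sunny", steps=5, rng=random.Random(0)))
```

Passing in a seeded `random.Random` instance keeps a run reproducible; the exact sequence printed depends on the seed.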

Elements of Reinforcement Machine Learning

1. Policy: A policy defines how an agent behaves at any given time. It maps the perceived states of the environment to the actions that the agent takes in those states. The policy is the core element of RL, as it alone can define the behavior of the agent.

In some situations, it may be a simple function or a lookup table, whereas in others it may involve extensive computation such as a search process. A policy can be either deterministic or stochastic:

For deterministic policy: a = π(s)

For stochastic policy: π(a | s) = P[At = a | St = s]
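The difference between the two kinds of policy can be sketched in a few lines. The state names, actions, and probabilities below are assumptions chosen for illustration: a deterministic policy is a plain lookup table, while a stochastic policy stores a probability for each action and samples from it.

```python
import random

# Deterministic policy a = pi(s): a lookup table from state to action.
deterministic_policy = {"S9": "up", "S5": "up", "S1": "right"}

# Stochastic policy pi(a | s): a probability for each action in each state.
stochastic_policy = {
    "S9": {"up": 0.7, "right": 0.3},
    "S5": {"up": 1.0},
}


def act_deterministic(state):
    """Always return the same action for a given state."""
    return deterministic_policy[state]


def act_stochastic(state, rng):
    """Sample an action according to pi(a | s)."""
    actions = list(stochastic_policy[state])
    probabilities = [stochastic_policy[state][a] for a in actions]
    return rng.choices(actions, weights=probabilities)[0]


rng = random.Random(0)
print(act_deterministic("S9"))
print([act_stochastic("S9", rng) for _ in range(5)])
```

Calling `act_deterministic("S9")` always gives "up", whereas repeated calls to `act_stochastic("S9", rng)` return "up" about 70% of the time and "right" otherwise.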

2. Reward Signal: The reward signal defines the goal of reinforcement learning. At each step, the environment sends an immediate signal to the learning agent, and this signal is known as the reward signal. Rewards are given according to the good and bad actions of the agent. The agent's main objective is to maximize the total reward it receives. The reward signal can change the policy: if an action selected by the agent leads to a low reward, the policy may change to select other actions in the future.

3. Value Function: The value function tells us how good a state or an action is and how much reward the agent can expect from it. While the reward is the immediate signal for each good and bad action, the value function specifies which states and actions are good in the long run. The value function depends on the reward since, without reward, there could be no value. The goal of estimating values is to obtain more reward.

4. Model: The fourth element of reinforcement learning is the model, which mimics the behavior of the environment. With the help of the model, we can make inferences about how the environment will behave. For example, given a state and an action, a model can predict the next state and reward.

Benefits Of Reinforcement Learning

1. Focuses more on the problem as a whole:

Conventional machine learning algorithms are designed to excel at specific subtasks, without a notion of the big picture. RL, on the other hand, doesn’t divide the problem into subproblems; it directly works to maximize the long-term reward.

2. Does not require a separate data collection step:

In RL, training data is obtained via the direct interaction of the agent with the environment. Training data is the learning agent’s experience, not a separate collection of data that has to be fed to the algorithm.

3. Able to work in a dynamic uncertain environment:

RL algorithms are inherently adaptive and built to respond to changes in the environment. In RL, time matters, and the experience that the agent collects is not independent and identically distributed (i.i.d.).

Approaches to Implement Reinforcement Machine Learning

There are mainly three approaches to implementing Reinforcement Learning:

1. Value-based:

The value-based approach is about finding the optimal value function, which is the maximum value achievable at a state under any policy. The agent therefore expects the long-term return at any state s under policy π.

2. Policy-based:

The policy-based approach aims to find the optimal policy for the maximum future reward without using a value function. In this approach, the agent tries to apply a policy such that the action performed at each step helps to maximize the future reward.

The policy-based approach has mainly two types of policy:

- Deterministic: The policy (π) produces the same action every time the agent is in a given state.

- Stochastic: Probability determines the action that the policy produces.

3. Model-based:

In the model-based approach, we create a virtual model of the environment, and the agent explores that model to learn and gain rewards from it. There is no single solution or algorithm for this approach, since the model representation is different for each environment that the agent encounters.

Challenges Before Reinforcement Learning

- We need to design the reward functions properly.

- Poorly specified reward functions may make the agent too risk-sensitive or too focused on particular rewards.

- A limited number of samples to learn from is a huge obstacle, as it leads to poor results and a larger number of penalties.

- Overloading of states is never a good sign, as it may drastically degrade the results of the model. This happens when we apply too much reinforcement to a problem.

Widely Used Algorithms for Reinforcement Machine Learning

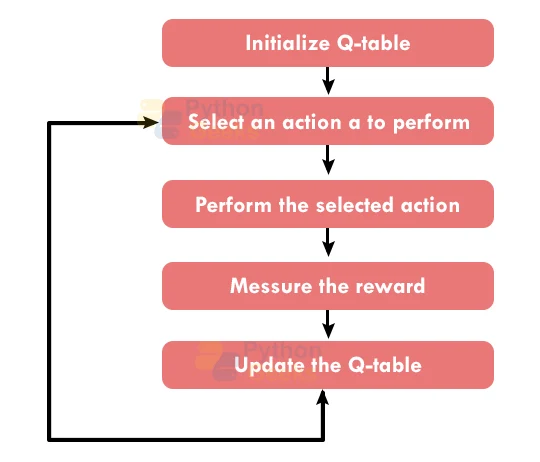

1. Q-Learning

Q-learning is an off-policy, model-free Reinforcement Learning algorithm. It is called off-policy because it learns the value of the best (greedy) policy even while the agent follows a different policy, for example one that takes random exploratory actions.

Q here denotes quality, that is, how good an action is at maximizing the reward that the agent receives.

When we program the model using this algorithm, we construct a reward matrix that stores the reward the agent receives for specific moves. For example, if the reward for an action is 100, then this value is stored in the matrix at the position corresponding to that move.
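The core of Q-learning is a single update rule applied to a table of Q-values. The sketch below is a minimal illustration under stated assumptions: the table is a dictionary keyed by (state, action), the learning rate `alpha` and discount `gamma` are arbitrary example values, and the helper name `q_learning_update` is hypothetical.

```python
from collections import defaultdict

ACTIONS = ["up", "down", "left", "right"]


def q_learning_update(q, state, action, reward, next_state, alpha, gamma):
    """Move Q(s, a) toward reward + gamma * max over a' of Q(s', a')."""
    # Off-policy: the target uses the best next action, whatever the agent does next.
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    target = reward + gamma * best_next
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


q_table = defaultdict(float)  # unseen (state, action) pairs start at 0

# Stepping right from S3 onto the diamond block S4 earns +1.
q_learning_update(q_table, "S3", "right", 1.0, "S4", alpha=0.5, gamma=0.9)
# Stepping right from S2 to S3 earns nothing now but leads toward the reward.
q_learning_update(q_table, "S2", "right", 0.0, "S3", alpha=0.5, gamma=0.9)
print(q_table[("S3", "right")], q_table[("S2", "right")])
```

After these two updates, Q(S3, right) is 0.5 and Q(S2, right) is about 0.225: the reward found at the goal starts to propagate backwards to the blocks that lead to it.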

2. SARSA

SARSA is the acronym for State-Action-Reward-State-Action, and the algorithm has various commonalities with the Q-learning approach. However, the difference lies in the fact that it is an on-policy method, unlike Q-learning, which is an off-policy algorithm.
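The on-policy nature of SARSA shows up in its update rule, which uses the action the agent has actually chosen for the next step instead of the best available one. This is a minimal sketch; the existing Q-values, the learning rate, the discount factor, and the helper name `sarsa_update` are assumptions for illustration.

```python
from collections import defaultdict


def sarsa_update(q, state, action, reward, next_state, next_action, alpha, gamma):
    """Move Q(s, a) toward reward + gamma * Q(s', a') for the action actually chosen."""
    target = reward + gamma * q[(next_state, next_action)]
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


q_table = defaultdict(float)
q_table[("S3", "right")] = 0.5  # assumed existing estimates for the example
q_table[("S3", "down")] = 0.1

# The agent moved right from S2 to S3 and has already picked "down" as its next action.
sarsa_update(q_table, "S2", "right", 0.0, "S3", "down", alpha=0.5, gamma=0.9)
print(q_table[("S2", "right")])
```

Here Q(S2, right) becomes about 0.045, because the update follows the chosen action "down" (value 0.1). A Q-learning update on the same numbers would use the best action in S3 (value 0.5) and give about 0.225 instead.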

3. Deep Q-Network

Deep Q-Network makes use of a neural network instead of a two-dimensional array for storing the values. The reason is that tabular Q-learning cannot estimate or update values for states that are not in its matrix.

To put it simply, if the agent encounters a completely new and unknown state, normal Q-learning will not be able to estimate the value of the reward. Apart from this, Q-learning follows a dynamic programming approach using 2-D arrays, which helps in distributing the reward accurately.
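The benefit of replacing the table with a function can be shown without any deep learning library. The sketch below is not a Deep Q-Network: a small linear model stands in for the neural network so that the example stays dependency-free, and the feature vector, training data, and parameter values are all made-up assumptions. The discount factor is set to 0 only to keep the arithmetic easy to follow.

```python
# Sketch of the idea behind a Deep Q-Network, with a linear model standing in
# for the neural network (a real DQN uses a multi-layer network).
ACTIONS = ["left", "right"]


def features(position):
    """Hypothetical feature vector for a position on a line: [bias, position]."""
    return [1.0, float(position)]


def q_value(weights, position, action):
    """Q(s, a) as a dot product of the action's weights and the state features."""
    return sum(w * x for w, x in zip(weights[action], features(position)))


def update(weights, position, action, reward, next_position, alpha, gamma):
    """One semi-gradient Q-learning step on the weights of the chosen action."""
    best_next = max(q_value(weights, next_position, a) for a in ACTIONS)
    error = reward + gamma * best_next - q_value(weights, position, action)
    weights[action] = [
        w + alpha * error * x for w, x in zip(weights[action], features(position))
    ]


weights = {a: [0.0, 0.0] for a in ACTIONS}
# Train only on positions 1 and 2, where moving right is rewarded.
for _ in range(20):
    update(weights, 1, "right", 1.0, 2, alpha=0.1, gamma=0.0)
    update(weights, 2, "right", 2.0, 3, alpha=0.1, gamma=0.0)

# Position 5 was never visited, yet the model still produces an estimate.
print(q_value(weights, 5, "right"), q_value(weights, 5, "left"))
```

A table would have no entry for position 5, but the learned weights generalize from the visited positions and rate "right" above "left" there. A neural network plays the same role in a Deep Q-Network, with far more capacity than this linear stand-in.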

Types of Reinforcement Machine Learning

We can divide Reinforcement Learning into two types:

1. Positive Reinforcement:

Positive reinforcement means adding something to increase the tendency that an expected behavior will occur again. It has a positive impact on the behavior of the agent and increases the strength of that behavior.

This type of reinforcement can sustain the changes for a long time. However, too much positive reinforcement may cause an overload of states that can diminish the results.

2. Negative Reinforcement:

Negative reinforcement is the opposite of positive reinforcement, as it increases the tendency that a specific behavior will occur again by avoiding a negative condition.

It can be more effective than positive reinforcement depending on the situation and behavior. However, it provides reinforcement only to meet the minimum required behavior.

Advantages of Reinforcement Learning

1. It can solve higher-order, complex problems, and the solutions obtained can be very precise.

2. The reason for its precision is that it is modeled on the way humans learn.

3. The model undergoes a rigorous training process that takes time. However, this helps to correct errors.

4. Because of its learning ability, it can be combined with neural networks. This combination is known as deep reinforcement learning.

Disadvantages of Reinforcement Learning

1. Using reinforcement learning models for simpler problems may not give accurate results. The reason is that these models are geared toward complex problems.

2. Using it for simpler problems instead of complex ones wastes processing power and memory.

3. We need a huge amount of data to feed the model. Reinforcement Learning models require a lot of training data and time to produce accurate results.

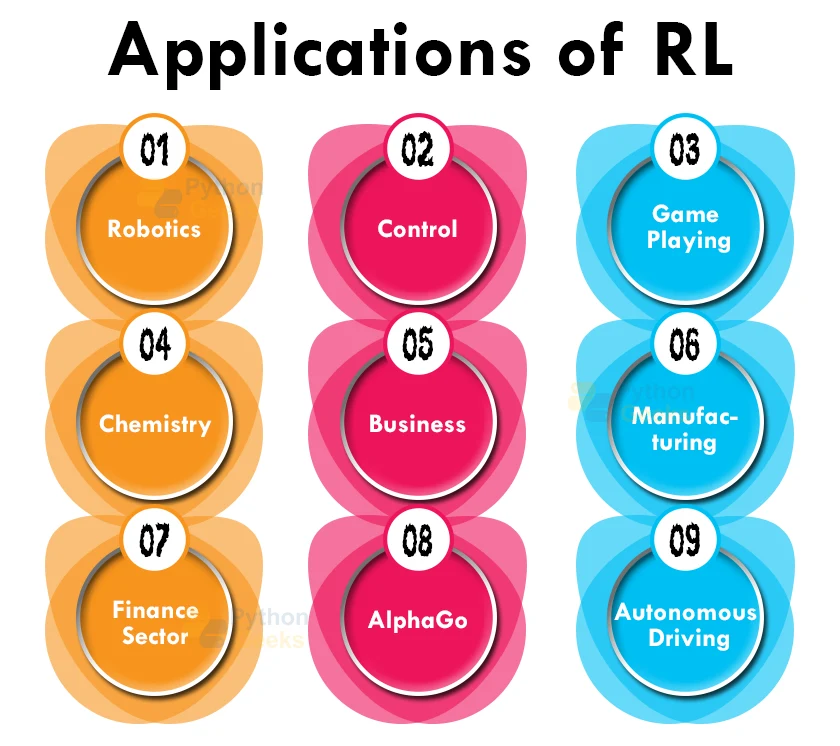

Applications of Reinforcement Learning in Machine Learning

1. Robotics:

Reinforcement Learning is used extensively for robot navigation, robo-soccer, walking, juggling, and so on because of its precision and reward mechanism. In the real world, where the response of the environment to the behavior of the robot is uncertain, pre-programming accurate actions is nearly impossible.

In such scenarios, RL provides an efficient way to build general-purpose robots. It has been successfully applied to robotic path planning, where a robot must find a short, smooth, and navigable path between two locations that is free of collisions and compatible with the dynamics of the robot.

2. Control:

We can use Reinforcement Learning for adaptive control, such as factory processes, admission control in telecommunications, and helicopter piloting.

3. Game Playing:

We can use RL effectively in game playing, such as tic-tac-toe, chess, and other games based on sequences of moves.

4. Chemistry:

We can make use of RL for optimizing chemical reactions.

5. Business:

We can make use of RL for business strategy planning.

6. Manufacturing:

In many automobile manufacturing companies, robots use deep reinforcement learning to pick goods and put them in containers.

7. Finance Sector:

Many companies make use of RL in the finance sector for evaluating trading strategies.

8. AlphaGo:

One of the most complex strategy games is a roughly 3,000-year-old Chinese board game called Go. Its complexity stems from the fact that there are about 10^170 legal board positions, many orders of magnitude more than in chess. In 2016, an RL-based Go agent called AlphaGo defeated Lee Sedol, one of the world's strongest Go players.

9. Autonomous Driving:

An autonomous driving system must perform multiple perception and planning tasks in an uncertain environment. Some specific tasks where RL finds application include vehicle path planning and motion prediction. Vehicle path planning requires several low and high-level policies to make decisions over varying temporal and spatial scales.

Difference Between Supervised and Reinforcement Learning

| Reinforcement Learning | Supervised Learning |
| --- | --- |
| RL works by interacting with the environment. | Supervised learning works on an existing dataset. |
| The RL algorithm works the way the human brain does when making decisions. | Supervised learning works the way a human learns under the supervision of a guide. |
| No labeled dataset is present. | A labeled dataset is present. |
| No previous training is provided to the learning agent. | Training is provided to the algorithm so that it can predict the output. |
| RL helps to make decisions sequentially. | Supervised learning makes a decision each time an input is given. |

Distinguishing Factors Between Machine Learning, Deep Learning, and Reinforcement Learning

Machine Learning

Machine Learning is a subset of AI in which computers are given the ability to progressively improve their performance on a specific task with data, without being explicitly programmed (following Arthur Lee Samuel’s definition). Samuel coined the term “machine learning”. Its two classic types are supervised and unsupervised learning.

Deep Learning

It comprises several layers of neural networks that are designed to perform more sophisticated tasks. The construction of deep learning models is inspired by the design of the human brain, but simplified. The layers are responsible for gradually learning more abstract features of the data.

Although deep learning solutions can provide impressive results, in terms of scale they are no match for the human brain.

Reinforcement Learning

As we have stated above, RL employs a system of rewards and penalties to compel the computer to solve a problem by itself. Human involvement is limited to changing the environment and tweaking the system of rewards and penalties. As the computer tries to maximize the reward, it is prone to finding unexpected ways of doing so. Human involvement mainly focuses on preventing it from exploiting the system and on motivating the machine to perform the task in the way the programmer expects.

Future of Reinforcement Learning

In recent years, significant progress has been made in the area of deep reinforcement learning. Deep reinforcement learning uses deep neural networks to model the value function (value-based), the agent’s policy (policy-based), or both (actor-critic). Before the widespread success of deep neural networks, complex features had to be engineered by hand to train an RL algorithm. This meant reduced learning capacity, limiting the scope of RL to simple environments.

With deep learning, we can build models with millions of trainable weights, freeing the user from tedious feature engineering. Relevant features are learned automatically during the training process, enabling the agent to learn optimal policies in complex environments.

Conventionally, RL is applied to one task at a time. Each task is learned by a separate RL agent, and these agents do not share knowledge with each other. This makes learning complex behaviors, such as driving a car, inefficient and slow. Problems that share a common information source, have a related underlying structure, and are interdependent can get a huge performance boost by allowing multiple agents to work together.

Conclusion

With that, we have reached the end of this article, which covered the basics of Reinforcement Learning in brief. We saw that even though Reinforcement Learning is not yet as widely deployed in real-life applications as supervised learning, it still plays a pivotal role in Machine Learning. We also looked at the advantages, disadvantages, and applications of Reinforcement Learning. We hope that this article from PythonGeeks was able to answer your questions about the concept of Reinforcement Learning.