Recurrent Neural Network – RNN in Machine Learning

So far, you’ve seen CNNs and how they help Machine Learning models identify, classify, and process input images. However, Machine Learning is capable of much more than dealing with images. We train models so that they replicate human-like decision-making behavior.

Before we proceed further in understanding other complex algorithms apart from CNN, let me ask you a simple question. “Learning you do Machine like?” Don’t scratch your head in confusion, I know it’s too difficult to decipher the above question. Let me rephrase it for you. “Do you like Machine Learning?” Now it makes sense, right?

Now consider a situation where you have to build a Machine Learning model that has to process the above statement. How would you train the model so that it can process the jumbled sentence? How are search engines able to give near-perfect results even when the words in your query are in the wrong order?

Well, PythonGeeks is here to help you with the answer: RNN. In this article, PythonGeeks will guide you through the RNN algorithm, how it works, and its applications. The article assumes that you are aware of the basics of neural networks. So, let’s begin learning about RNN.

Introduction to RNN

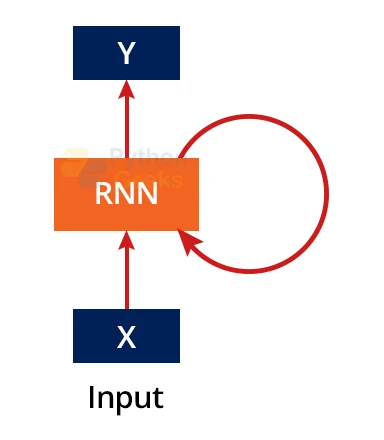

In technical terms, a Recurrent Neural Network (RNN) is a kind of neural network in which the connections between nodes form a directed graph along a sequence, so that information from earlier steps flows into later ones. In other words, an RNN deals with sequences of data. It works on the principle of preserving the output of a layer and feeding it back as input in order to predict the next element of the sequence. Let’s understand the definition a little better with the help of an example.

Example:

Let us consider your messaging application. Every time you try to type a sentence through the keyboard, the application tends to predict the next word that you are about to type. The core idea behind this prediction is the sequence of words that we type before. Recurrent Neural Network tends to store the previously typed words and feeds this sequence to the algorithm in order to predict the next word in the sequence.

The same idea applies in other fields like stock prediction, sensor readings, medical records, and others. In each case, the recurrent neural network uses its internal (hidden) state to carry information about the earlier elements of the sequence, which links each output to what came before it. Because of this, RNN is primarily used in Natural Language Processing (NLP), Speech Recognition, and Language Translation.

Why prefer RNN over other Algorithms?

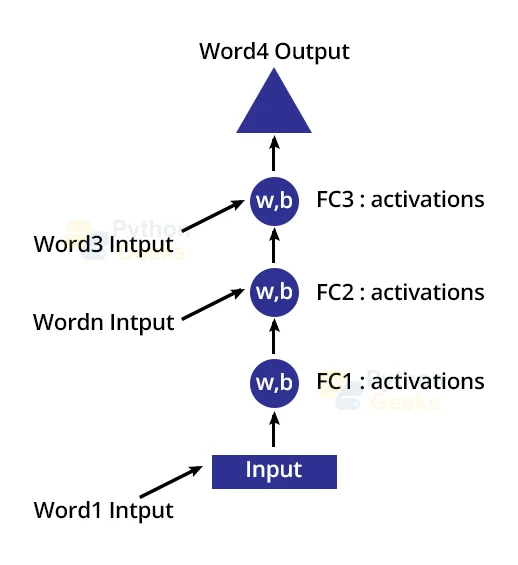

Let us go back to the example of word prediction. Let us try to predict the next word in the sentence using a Multilayer Perceptron (MLP). In its most basic form, an MLP has an input layer, hidden layers, and an output layer. The typed words are fed to the input layer and then sent to the hidden layers for further processing.

At the first hidden layer, an activation is applied to the input, and the result is then sent to the subsequent layers. But do these layers have any connections in common? No. These layers are independent entities with their own weights, and nothing is carried over from one input to the next, so the network cannot form a meaningful connection between the words of a sequence.

As we have learned earlier, RNN tends to feed the output of one layer to the other in order to deal with the sequential data. This makes RNN a powerful tool over other algorithms. In simple terms, RNN is better than other neural networks because:

- RNN can efficiently handle sequential data, while plain feedforward networks struggle with it.

- Other algorithms only consider the current input for prediction, while RNN keeps a hidden state that summarizes the previous inputs and uses it for more accurate predictions.

- Other algorithms have no built-in way to memorize the previous inputs.

Thus, RNN is a better option for Sequential Data Processing.
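
To make the difference concrete, here is a minimal sketch of why keeping a state matters for word order. The word-to-number encoding and the weights `w_h` and `w_x` are made up for illustration and are not part of any real model. An order-blind summary (just adding the word values) gives the same result for “Do you like Machine Learning” and “Learning you do Machine like”, while a recurrent state gives different results because each step depends on everything that came before it.

```python
import math

# Hypothetical toy encoding (an assumption for this sketch): one number per word.
VOCAB = {"do": 1, "you": 2, "like": 3, "machine": 4, "learning": 5}

def bag_of_words_score(words):
    """Order-blind summary: add up the word values."""
    return sum(VOCAB[w] for w in words)

def recurrent_state(words, w_h=0.9, w_x=0.1):
    """Order-aware summary: each step mixes the new word into the previous state."""
    h = 0.0
    for w in words:
        h = math.tanh(w_h * h + w_x * VOCAB[w])
    return h

correct = ["do", "you", "like", "machine", "learning"]
shuffled = ["learning", "you", "do", "machine", "like"]

# Same total for both orders, so the order information is lost.
print(bag_of_words_score(correct), bag_of_words_score(shuffled))
# Different final states, so the order information is preserved.
print(recurrent_state(correct), recurrent_state(shuffled))
```

A real model would learn its weights from data and use vectors rather than single numbers, but the contrast between the two summaries is the same.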

Working of the RNN Algorithm

Suppose we have a model with one input layer, an output layer, and three hidden layers in between. The network processes the data at the input layer and then sends it to the first hidden layer. In other neural networks, the hidden layers are independent of each other, meaning they have their own weights and biases and are not interconnected.

Let the weights and biases of these layers be (w1, b1), (w2, b2) and (w3, b3) respectively. These layers perform individual activations on the data, and thus we cannot combine their results.

As the name suggests, a Recurrent Neural Network applies the same computation repeatedly, forming a loop as the data passes from one step to the next. To do so, it uses the same weights and biases for all the hidden layers. Because the weights and biases are shared, the layers can be combined into a single recurrent layer through which the data is fed repeatedly, once per element of the sequence. This is what lets the algorithm deal with sequences of data.

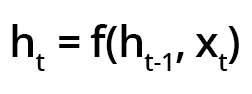

The RNN block shown above applies a recurrence formula to the input vector and the previous state. At any given time “t”, if the new state is denoted ht, the previous state ht-1, and the current input xt, then the recurrence formula is represented as

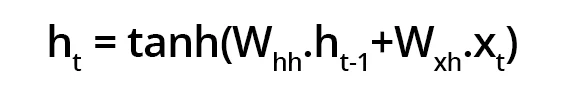

In the simplest case, the activation function is tanh. Take the weight at the recurrent neuron as Whh and the weight at the input neuron as Wxh. Then, at any given time “t”, with xt as the current input, the current state ht is given by the formula

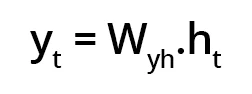

Once the current state has been computed, we calculate the output yt from it using the output-layer weight Why: yt = Why · ht.
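
The recurrence can be sketched in a few lines of plain Python. This is only an illustration under some assumptions: the state update is taken to be ht = tanh(Whh · ht-1 + Wxh · xt) as described above, the output is assumed to be a simple linear readout yt = Why · ht, and every weight value below is made up. Note that the same `W_xh` and `W_hh` are reused at every time step.

```python
import math

def rnn_step(x_t, h_prev, W_xh, W_hh):
    """One recurrence step: h_t = tanh(W_hh * h_prev + W_xh * x_t)."""
    h_t = []
    for i in range(len(h_prev)):
        total = sum(W_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        total += sum(W_xh[i][k] * x_t[k] for k in range(len(x_t)))
        h_t.append(math.tanh(total))
    return h_t

def output(h_t, W_hy):
    """Assumed linear readout: y_t = W_hy * h_t."""
    return [sum(row[j] * h_t[j] for j in range(len(h_t))) for row in W_hy]

# Illustrative (hypothetical) weights: 1 input feature, 2 hidden units, 1 output.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
W_hy = [[1.0, -1.0]]

h = [0.0, 0.0]          # initial state
states = []
for x_t in ([1.0], [0.5], [-1.0]):
    h = rnn_step(x_t, h, W_xh, W_hh)   # same weights at every step
    states.append(h)
    print(h, output(h, W_hy))
```

Because tanh squashes its input, every entry of the state stays strictly between -1 and 1, no matter how long the sequence is.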

Training Of RNN

- Provide the input to the network one time step at a time.

- Calculate the current state with the help of the current input and the previous state.

- The current state ht now becomes ht-1 for the next time step.

- After all the time steps have been processed, use the final state (or combine the outputs generated at each step) to calculate the output.

- Calculate the error by considering the difference between the predicted and actual output.

- Backpropagate the error to adjust the weights and attain better results.
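
The training steps above can be sketched with a tiny RNN that has a single hidden unit, so that every weight is just a number and the gradients can be written out by hand. This is a minimal sketch, not a production training loop: the squared-error loss, the learning rate, the starting weights, and the toy sequence are all assumptions chosen for illustration. The backward pass walks through the time steps in reverse, which is usually called backpropagation through time.

```python
import math

def forward(params, xs):
    """Run the sequence through the RNN; return all states and the final output."""
    w_h, w_x, w_y = params
    hs = [0.0]                                   # hs[0] is the initial state
    for x in xs:
        hs.append(math.tanh(w_h * hs[-1] + w_x * x))
    return hs, w_y * hs[-1]

def loss_and_grads(params, xs, target):
    """Squared-error loss and its gradients, computed backward through time."""
    w_h, w_x, w_y = params
    hs, y = forward(params, xs)
    err = y - target
    loss = 0.5 * err ** 2
    g_wy = err * hs[-1]
    g_wh = g_wx = 0.0
    dh = err * w_y                               # gradient w.r.t. the last state
    for t in range(len(xs), 0, -1):
        da = dh * (1.0 - hs[t] ** 2)             # back through tanh
        g_wh += da * hs[t - 1]
        g_wx += da * xs[t - 1]
        dh = da * w_h                            # pass the error to the previous step
    return loss, (g_wh, g_wx, g_wy)

def train(params, xs, target, lr=0.1, epochs=200):
    """Plain gradient descent on one sequence; returns final weights and loss history."""
    losses = []
    for _ in range(epochs):
        loss, grads = loss_and_grads(params, xs, target)
        losses.append(loss)
        params = tuple(p - lr * g for p, g in zip(params, grads))
    return params, losses

xs, target = [0.5, -0.1, 0.3], 0.8               # made-up sequence and target
params, losses = train((0.5, 0.5, 0.5), xs, target)
print(losses[0], losses[-1])                     # the loss should go down
```

In practice you would let a framework compute these gradients automatically, but writing them out once shows how the error at the end of the sequence reaches the weights used at every earlier step.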

Types of RNN

Now that we are familiar with the basic structure and working of RNN, let us take a quick look at the different types of RNN that help in dealing with sequential data. There are primarily 4 types of RNN, as listed below:

1. One to One: This is the most basic type of RNN that deals with generalized Machine Learning problems. It is also known by the name Vanilla Neural Network for the simplicity of its algorithm. The architecture of this type of RNN comprises a single input as well as a single output layer.

2. One to Many: This type of RNN generally deals with image captioning problems. The architecture of this RNN comprises single input with multiple outputs.

3. Many to One: This type of RNN deals with problems involving single output for a combination of various inputs. It proves to be beneficial in the case of Sentiment Analysis where we have to classify the given statements as negative or positive based on the criteria we feed.

4. Many to Many: This is a much more complex type of RNN as compared to the other types. Its architecture comprises a combination of several outputs for a given sequence of inputs. This makes the network beneficial for problems involving Machine Translation.
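
The difference between these types is mostly about how many inputs go in and how many outputs come out. Here is a rough sketch using a one-unit RNN with made-up weights. Two simplifications are assumed: the one-to-many version feeds zeros as input after the first step, and the many-to-many version produces exactly one output per input, whereas translation models usually allow the output length to differ from the input length.

```python
import math

def step(x, h, w_h=0.5, w_x=1.0):
    """One recurrent step with illustrative (hypothetical) weights."""
    return math.tanh(w_h * h + w_x * x)

def many_to_one(xs):
    """Read the whole sequence, return a single output (e.g. a sentiment score)."""
    h = 0.0
    for x in xs:
        h = step(x, h)
    return h

def many_to_many(xs):
    """Return one output per input."""
    h, ys = 0.0, []
    for x in xs:
        h = step(x, h)
        ys.append(h)
    return ys

def one_to_many(x, n_outputs):
    """Take a single input, then keep unrolling the state to emit several outputs."""
    h = step(x, 0.0)
    ys = [h]
    for _ in range(n_outputs - 1):
        h = step(0.0, h)        # assumption: no new input after the first step
        ys.append(h)
    return ys

seq = [0.2, -0.4, 0.9]
print(many_to_one(seq))         # 1 value
print(many_to_many(seq))        # 3 values
print(one_to_many(0.5, 4))      # 4 values
```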

Challenges Faced by RNN

1. Vanishing Gradient Problem: During training, the gradient carries the error information back through every time step. When that gradient shrinks at each step, it becomes too small to have any meaningful effect on the weights for the early part of the sequence. As a result, the RNN struggles to learn long-range dependencies, which makes training on long sequences slow and difficult.

2. Exploding Gradient Problem: This problem occurs when the gradient grows exponentially instead of decaying. Large gradients accumulate across the time steps and cause disproportionately large changes to the weights, which makes training unstable.
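
Both problems come from multiplying by the same factor at every time step. A minimal sketch, assuming the simplest possible recurrence ht = w_h · ht-1 with no activation function, shows the effect: the gradient of the last state with respect to the first is w_h multiplied by itself once per step.

```python
def gradient_through_time(w_h, steps):
    """For h_t = w_h * h_(t-1), the gradient of h_T w.r.t. h_0 is w_h ** steps."""
    grad = 1.0
    for _ in range(steps):
        grad *= w_h             # one multiplication per time step
    return grad

for w_h in (0.5, 1.0, 1.5):
    print(w_h, gradient_through_time(w_h, 50))
```

With a weight below 1 the gradient shrinks toward zero after 50 steps (vanishing), and with a weight above 1 it grows to a very large number (exploding). A real RNN has a tanh activation and weight matrices rather than a single number, but the same repeated multiplication is at work.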

LSTM and its working

As seen in the previous section, RNNs suffer from gradient problems. To overcome them, researchers came up with a reliable solution: Long Short-Term Memory (LSTM).

The main function of the LSTM is to remember information over long periods of time. It is a special type of RNN with a chain-like structure of repeating modules. Instead of the single tanh layer found in a simple RNN, each module contains several layers (sigmoid and tanh) that interact with each other through gates.

Let us look at how an LSTM works in a simple way:

- The first step decides how much of the previous information to retain for future processing, which means discarding whatever is no longer relevant. To do so, the network uses a sigmoid function (the forget gate) that looks at both the previous state and the current input and outputs a value between 0 and 1: 0 means forget completely and 1 means keep everything.

- The second stage decides what new information to store and has two parts: a sigmoid function and a tanh function. The sigmoid function (the input gate) decides which values are allowed to pass. The tanh function creates candidate values between -1 and 1, and the candidates approved by the sigmoid function are added to the cell state.

- The final stage decides the output. First, a sigmoid function (the output gate) chooses which parts of the cell state will pass through. Then, the cell state is passed through tanh and multiplied by the output of the sigmoid gate to give the new output.
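
The three stages above can be sketched as a single LSTM step. To keep it readable, this sketch assumes a cell with one unit, so each gate has just three numbers: a weight for the input, a weight for the previous output, and a bias. The parameter names and values are hypothetical and chosen only for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gate_input(x_t, h_prev, weights):
    """Weighted sum of the current input and the previous output, plus a bias."""
    w_x, w_h, b = weights
    return w_x * x_t + w_h * h_prev + b

def lstm_step(x_t, h_prev, c_prev, p):
    # Stage 1: forget gate decides how much of the old cell state to keep.
    f = sigmoid(gate_input(x_t, h_prev, p["forget"]))
    # Stage 2: input gate (sigmoid) scales the candidate values (tanh).
    i = sigmoid(gate_input(x_t, h_prev, p["input"]))
    g = math.tanh(gate_input(x_t, h_prev, p["candidate"]))
    c_t = f * c_prev + i * g
    # Stage 3: output gate chooses what part of tanh(cell state) to emit.
    o = sigmoid(gate_input(x_t, h_prev, p["output"]))
    h_t = o * math.tanh(c_t)
    return h_t, c_t

# Hypothetical parameters: (input weight, recurrent weight, bias) per gate.
params = {
    "forget": (0.5, 0.5, 1.0),
    "input": (0.5, 0.5, 0.0),
    "candidate": (1.0, 1.0, 0.0),
    "output": (0.5, 0.5, 0.0),
}

h, c = 0.0, 0.0
for x_t in (1.0, 0.5, -0.5):
    h, c = lstm_step(x_t, h, c, params)
    print(round(h, 4), round(c, 4))
```

When the forget gate is close to 1 and the input gate is close to 0, the cell state passes through a step almost unchanged, which is what allows an LSTM to hold on to information across many time steps.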

Applications of RNN

The most interesting part about learning any algorithm is finding out about the real-life application of the algorithm. Let us take a look at all the domains in which RNN plays a crucial role.

1. Translations

One of the most common and widely used applications of RNN is the translation of one language to another. Since each language has its own semantics and vocabulary, the translated sentence may differ in length and structure from the original statement. Since other algorithms are incapable of remembering previous inputs, they cannot handle outputs of varying length. In this case, RNN helps us with better and more accurate translations.

2. Sentiment Analysis

Sentiment Analysis deals with the classification of text on the basis of the emotion it conveys. RNN can be deployed on social media platforms to classify texts as positive or negative. This helps the tech giants provide a safe environment for their users by filtering out negative comments, which in turn ensures a better user experience.

3. Image Captioning

When deployed in combination with CNNs, RNN is a very useful tool for generating text on the basis of images. The CNN model identifies what is in the image and provides an accurate representation of it. The RNN uses this representation to form an expressive description of the image.

4. Speech Recognition

Earlier, tech giants used Hidden Markov Models for recognizing speech. With the evolution of neural networks, RNN became a more accurate alternative for speech recognition. In conjunction with LSTMs, we can use RNN to recognize speech and convert it into text without losing context.

5. Series Prediction

RNN is really beneficial when we have to deal with problems involving sequential data. This feature makes RNN a key tool in time series prediction. It is commonly applied to stock price prediction and many similar problems.

Conclusion

In this article on Recurrent Neural Networks, we came across the basics of RNN. We also saw how RNN is better equipped than other algorithms to handle sequential data. Now you even know how the RNN model works. Knowing the basics and the current applications will certainly help in developing better models and advancing this domain.
