Python OpenCV Human Activity Recognition – Decode Human Actions


Human activity recognition (HAR) is a way to teach machines to automatically identify human activities from sensor or image data. It has many practical uses in healthcare, sports, and security systems. The main idea behind HAR is to improve our understanding of human behaviour, enhance our quality of life, and prevent accidents or injuries. With technologies like wearables, smartphones, and IoT devices, we have more data than ever to train machines to recognize human activities.

Machine learning, especially deep learning, has proven to be highly effective at extracting key features from sensor data and accurately classifying human activities. Data science and machine learning are rapidly growing fields with the potential to change many areas of our lives, which makes them exciting areas of research.

Background

Human activity recognition is a computer technology that identifies and categorizes human activities from sensor or video data. It has many practical applications, such as monitoring health and analyzing sports performance. We train a computer model by providing it with examples of different activities and labelling them. Challenges exist, but new deep learning techniques have improved the accuracy and reliability of models. This technology is important now and will continue to grow in importance as we use more wearable sensors and smart devices in our everyday lives.

Techniques

Human activity recognition (HAR) is the task of figuring out what activity a person is doing based on data from sensors like accelerometers and gyroscopes. There are different machine learning techniques that can be used to solve this problem, such as Support Vector Machines (SVMs), Random Forests, Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Hidden Markov Models (HMMs), and Long Short-Term Memory (LSTM) Networks. Every technique has its own strengths and weaknesses, and the optimal choice depends on the particular situation. To find the best technique, it is often necessary to try different approaches and see what works best.

Human Activity Recognition Dataset

The human activity recognition dataset is a set of pictures with labels used to train and test models that recognize what people are doing in the pictures. There are two CSV files with information about the pictures and their labels. This dataset is used in many applications, like security cameras, healthcare, and sports. By recognizing human activities, these systems can help keep people safe, work better, and improve performance.

What is TensorFlow?

TensorFlow is a software library created by Google that makes it easier to build and use machine learning models. It can be used to build models that can recognize images, understand natural language, and make predictions. TensorFlow works with many different types of computers and programming languages, so it can be used by a wide variety of people. It is very popular and has a big community of people who work together to improve it and help others use it.

What is Keras Library?

Keras is a Python library that offers a high-level interface to create and train deep learning models with the TensorFlow machine learning library. It simplifies the process of building neural networks by providing a user-friendly and modular API, allowing for rapid experimentation and prototyping. Keras supports a wide range of neural network architectures and is highly customizable. This technology is utilized for a variety of applications, such as computer vision, natural language processing, and speech recognition.

How are human activities predicted by a model?

A human activity recognition model predicts activities such as calling or texting on new data through a process called classification. The model is trained on a dataset that includes labelled examples of different activities, like calling, laughing, and texting. By learning patterns and features from these examples, the model can recognize similar activities in new data.

For example, if the model sees patterns in the new data that match the calling activity, it will predict calling; if the patterns match the texting activity, it will predict texting. With enough examples and a good model design, the model can predict calling, texting, and other activities with high accuracy.
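To make the classification step concrete, here is a minimal sketch of how raw model scores can be turned into a predicted activity. The class names come from the examples above; the scores and the helper names `softmax` and `classify` are illustrative assumptions, not part of the project code.

```python
import numpy as np

# Illustrative class names taken from the examples in the text above.
CLASS_NAMES = ["calling", "laughing", "texting"]

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    shifted = scores - np.max(scores)  # subtract the max for numerical stability
    exp_scores = np.exp(shifted)
    return exp_scores / exp_scores.sum()

def classify(scores, class_names=CLASS_NAMES):
    """Return the most likely class name and its probability."""
    probs = softmax(np.asarray(scores, dtype=float))
    best = int(np.argmax(probs))
    return class_names[best], float(probs[best])

# Hypothetical raw scores for one image; the third class has the largest score.
label, prob = classify([1.2, 0.3, 3.1])
print(label, round(prob, 3))
```

The class with the largest probability is reported as the prediction, which is the same idea the trained network applies across all of its output classes.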

Prerequisites for Human Activity Recognition Using Python OpenCV

Having a thorough comprehension of the Python programming language and the OpenCV library is crucial. Additionally, meeting the following system requirements is essential.

1. Python 3.7 and above

2. Google Colab

Download Python OpenCV Human Activity Recognition Project

Please download the source code of Python OpenCV Human Activity Recognition Project from the following link: Python OpenCV Human Activity Recognition Project Code.

Why Google Colab?

Google Colab is an online platform where you can write and run Python code. It gives you access to cloud machines with plenty of memory and fast processors, so you can work with large datasets and train machine learning models quickly. There is no need to install any software on your personal computer, and it is easy to share your work with others. Colab comes with many pre-installed libraries that are commonly used in machine learning, so you can get started right away. If your own computer has a dedicated GPU with 4-8 GB of memory, you can run the project locally instead, but Google Colab is the suggested option.

Let’s Implement It

First of all, change the Google Colab runtime to GPU from the Runtime option available in the menu section.

1. To start, we are importing all the necessary libraries required for the implementation.

from matplotlib import pyplot as plt
from matplotlib import image as img
import os
import random
from PIL import Image
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelBinarizer
import pandas as pd
import numpy as np
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Flatten, Dense

2. These lines of code set the environment variables ‘KAGGLE_USERNAME’ and ‘KAGGLE_KEY’ to your Kaggle account’s username and API key, respectively.

import os
os.environ['KAGGLE_USERNAME'] = "your_kaggle_username"  # replace with your own username
os.environ['KAGGLE_KEY'] = "your_kaggle_api_key"  # replace with your own key; never publish a real key

You will get your username and key from your Kaggle account.

3. This is the Kaggle API command that downloads the dataset as a zip file.

!kaggle datasets download -d meetnagadia/human-action-recognition-har-dataset

Why steps 2-3 matter: Instead of manually downloading a dataset from Kaggle and uploading it again, you can use the Kaggle API command to download the dataset directly into Google Colab. This saves time and network usage. It also ensures that the data is up to date and reduces the risk of errors during a manual download and upload.
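As an alternative to typing credentials into a notebook cell, the username and key can be read from the `kaggle.json` token file that Kaggle lets you download from your account settings. The sketch below assumes the token has already been uploaded to the Colab session; the helper name `load_kaggle_credentials` and the example path are hypothetical.

```python
import json
import os

def load_kaggle_credentials(token_path):
    """Read a kaggle.json token file and export it as environment variables."""
    with open(token_path, "r", encoding="utf-8") as fh:
        token = json.load(fh)
    # The token file stores the two values under the keys "username" and "key".
    os.environ["KAGGLE_USERNAME"] = token["username"]
    os.environ["KAGGLE_KEY"] = token["key"]
    return token["username"]

# Example usage (the path is an assumption; adjust it to where the token was uploaded):
# load_kaggle_credentials("/content/kaggle.json")
```

Keeping the key in a separate file means the notebook itself can be shared without exposing the account.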

4. This line of code unzips the data.

!unzip human-action-recognition-har-dataset.zip

5. This code loads a file named ‘Training_set.csv’ from a folder named ‘Human Action Recognition’ and saves its data in a format that allows it to be easily analyzed and manipulated using Python.

data = pd.read_csv('/content/Human Action Recognition/Training_set.csv')

6. The following code will count the occurrences of each unique value in the ‘label’ column of the ‘data’ table, and save the results as a new object called ‘counts’. The output shows the count of each unique value, which can help to understand how the data is distributed and if there are any class imbalances.

counts = data['label'].value_counts()
counts
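One simple way to quantify class imbalance is the ratio between the largest and smallest class counts. The sketch below uses plain Python on a small made-up label list; the helper name `imbalance_ratio` is hypothetical. With the pandas Series from this step, the same figure would be `counts.max() / counts.min()`.

```python
from collections import Counter

def imbalance_ratio(labels):
    """Ratio of the most frequent class count to the least frequent one."""
    class_counts = Counter(labels)
    return max(class_counts.values()) / min(class_counts.values())

# Made-up labels for illustration only: two classes with 4 samples, one with 2.
demo_labels = ["calling"] * 4 + ["texting"] * 4 + ["laughing"] * 2
ratio = imbalance_ratio(demo_labels)
print(ratio)
```

A ratio close to 1 means the classes are roughly balanced; a much larger value suggests that some classes may need resampling or class weights.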

7. This code creates a function called ‘chooserandom’ that randomly selects and displays ‘n’ images from a dataset stored in a directory. It uses Matplotlib library to display the images and checks if the file exists before displaying it. It can help in visually inspecting the data and verifying that the image and its label are correctly aligned.

def chooserandom(n=1):
    plt.figure(figsize=(30,30))
    for i in range(n):
        # Pick a random row and build the path to its image file
        rnd = random.randint(0,len(data)-1)
        img_file = '/content/Human Action Recognition/train/' + data['filename'][rnd]
        if os.path.exists(img_file):
            # Arrange the images in a two-column grid
            plt.subplot(n//2+1, 2, i + 1)
            image = img.imread(img_file)
            plt.imshow(image)
            plt.title(data['label'][rnd])

8. This will display six random images from the training dataset.

chooserandom(6)

9. This code converts the categorical values in the ‘label’ column of the ‘data’ DataFrame into one-hot (binary) vectors and retrieves the unique classes present in the label column. The encoded labels are stored in the ‘y’ variable, and the unique classes are printed to the console. This prepares the labels for training a model with a softmax output layer.

encode = LabelBinarizer()
y = encode.fit_transform(data['label'])
classes = encode.classes_
print(classes)
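To see what this encoding produces, here is a small NumPy sketch of one-hot encoding for three or more classes. It is an illustration of the idea, not scikit-learn's implementation; the helper name `one_hot_encode` and the sample labels are assumptions.

```python
import numpy as np

def one_hot_encode(labels):
    """Return a one-hot matrix and the sorted array of unique class names."""
    class_names = np.unique(labels)                  # sorted unique labels
    indices = np.searchsorted(class_names, labels)   # column index of each label
    encoded = np.zeros((len(labels), len(class_names)), dtype=int)
    encoded[np.arange(len(labels)), indices] = 1     # set one column per row
    return encoded, class_names

y_demo, classes_demo = one_hot_encode(["texting", "calling", "laughing", "calling"])
print(classes_demo)
print(y_demo)
```

Each row contains a single 1 in the column of its class, and the column order follows the alphabetically sorted class names.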


10. This code retrieves the values in the ‘filename’ column of the ‘data’ DataFrame and stores them in a new variable ‘x’.

x = data['filename'].values

11. This code splits the data into training and testing sets, holding out 10% of the samples for testing. The fixed random_state makes the split reproducible.

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.1, random_state=100)
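The idea behind a shuffled hold-out split can be sketched in a few lines of NumPy. This is only an illustration: it will not reproduce the exact ordering that scikit-learn generates, and the helper name `holdout_split` and the filenames are hypothetical.

```python
import numpy as np

def holdout_split(items, labels, test_fraction=0.1, seed=100):
    """Shuffle the indices once, then carve off a test portion."""
    items = np.asarray(items)
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)      # seeded generator for reproducibility
    order = rng.permutation(len(items))
    n_test = int(np.ceil(test_fraction * len(items)))
    test_idx, train_idx = order[:n_test], order[n_test:]
    return items[train_idx], items[test_idx], labels[train_idx], labels[test_idx]

demo_names = [f"Image_{i}.jpg" for i in range(10)]  # hypothetical filenames
demo_targets = np.arange(10)
a_train, a_test, b_train, b_test = holdout_split(demo_names, demo_targets)
print(len(a_train), len(a_test))
```

Because the same permutation indexes both arrays, every filename stays paired with its label after the split.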

12. This code reads in the image data from the ‘x_train’ variable, resizes it to a size of (160, 160) pixels, and saves it as a list of NumPy arrays. This is useful for preprocessing the image data to ensure a consistent size, which can improve the performance of machine learning models.

img_data = []
size = len(x_train)
for i in range(size):
    image = Image.open('/content/Human Action Recognition/train/' + x_train[i])
    # Force three colour channels so every array has shape (160, 160, 3)
    image = image.convert('RGB')
    img_data.append(np.asarray(image.resize((160,160))))
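Before training, it can help to confirm that every image array has the same shape, since a single grayscale or RGBA image would prevent the list from being stacked into one batch. The sketch below uses synthetic arrays in place of real images; the helper name `stack_images` is hypothetical.

```python
import numpy as np

def stack_images(images, expected_shape=(160, 160, 3)):
    """Stack image arrays into one batch, failing early on a shape mismatch."""
    for i, im in enumerate(images):
        if im.shape != expected_shape:
            raise ValueError(f"image {i} has shape {im.shape}, expected {expected_shape}")
    return np.stack(images)

# Synthetic stand-ins for four resized RGB images.
fake_images = [np.zeros((160, 160, 3), dtype=np.uint8) for _ in range(4)]
batch = stack_images(fake_images)
print(batch.shape, batch.dtype)
```

The resulting array has one leading batch dimension followed by height, width, and channels, which is the layout the model's input expects.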

13. This code creates a model for image classification using a pre-trained VGG16 model and additional layers in Keras/TensorFlow. The pre-trained model’s layers are frozen, and the model includes a Flatten layer, two Dense layers with activation functions. This model can be used to classify images and make predictions about new images.

model = Sequential()

# Pre-trained VGG16 base without its original classification head
pretrained_model = tf.keras.applications.VGG16(include_top=False,
                                               input_shape=(160,160,3),
                                               pooling='avg',classes=15,
                                               weights='imagenet')

# Freeze the pre-trained layers so only the new layers are trained
for layer in pretrained_model.layers:
    layer.trainable=False

model.add(pretrained_model)
model.add(Flatten())
model.add(Dense(512, activation='relu'))
model.add(Dense(15, activation='softmax'))
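As a rough check on model size, the number of trainable parameters in the two new Dense layers can be worked out by hand. The sketch assumes the frozen VGG16 base emits a 512-value feature vector per image (its last convolutional block has 512 channels, and average pooling reduces each channel to one value); compare the result with the model summary rather than treating it as authoritative. The helper name `dense_params` is hypothetical.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units

FEATURES = 512  # assumed size of the pooled VGG16 feature vector

hidden_params = dense_params(FEATURES, 512)  # Dense(512, activation='relu')
output_params = dense_params(512, 15)        # Dense(15, activation='softmax')
trainable_total = hidden_params + output_params
print(hidden_params, output_params, trainable_total)
```

Since the base is frozen, only these head parameters are updated during training, which keeps training comparatively cheap.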

14. This code sets the optimizer, loss function, and evaluation metric for the previously defined Keras model. It compiles the model and generates a summary of its architecture, including the number of parameters and output shapes of each layer. This summary can be useful for understanding the structure of the model and debugging potential issues.

model.compile(optimizer='adam', loss='categorical_crossentropy',metrics=['accuracy'])
model.summary()
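The categorical cross-entropy loss named in this step can be illustrated with a small NumPy sketch. It follows the standard definition (the mean over samples of the negative log-probability assigned to the true class) and is not Keras's own implementation; the function and the example values are assumptions for illustration.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean negative log-probability of the true class over all samples."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)          # avoid log(0)
    per_sample = -np.sum(y_true * np.log(y_pred), axis=1)
    return float(np.mean(per_sample))

# Two made-up samples with one-hot labels and predicted probabilities.
y_true_demo = np.array([[0, 1, 0], [1, 0, 0]])
y_pred_demo = np.array([[0.1, 0.8, 0.1], [0.6, 0.3, 0.1]])
loss_demo = categorical_crossentropy(y_true_demo, y_pred_demo)
print(round(loss_demo, 4))
```

The loss shrinks toward zero as the probability assigned to the correct class approaches 1, which is what the optimizer tries to achieve during training.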


15. This code trains the Keras model using preprocessed image data and corresponding labels as input for 60 epochs. The resulting ‘history’ variable contains information about the training process, such as the loss and accuracy values for each epoch, which can be used to evaluate the performance of the model.

history = model.fit(np.asarray(img_data), y_train, epochs=60)


16. This function reads an image and resizes it to (160, 160), the input size the model expects.

def imread(fn):
    # Convert to RGB so grayscale or RGBA files still yield three channels
    image = Image.open(fn).convert('RGB')
    return np.asarray(image.resize((160,160)))

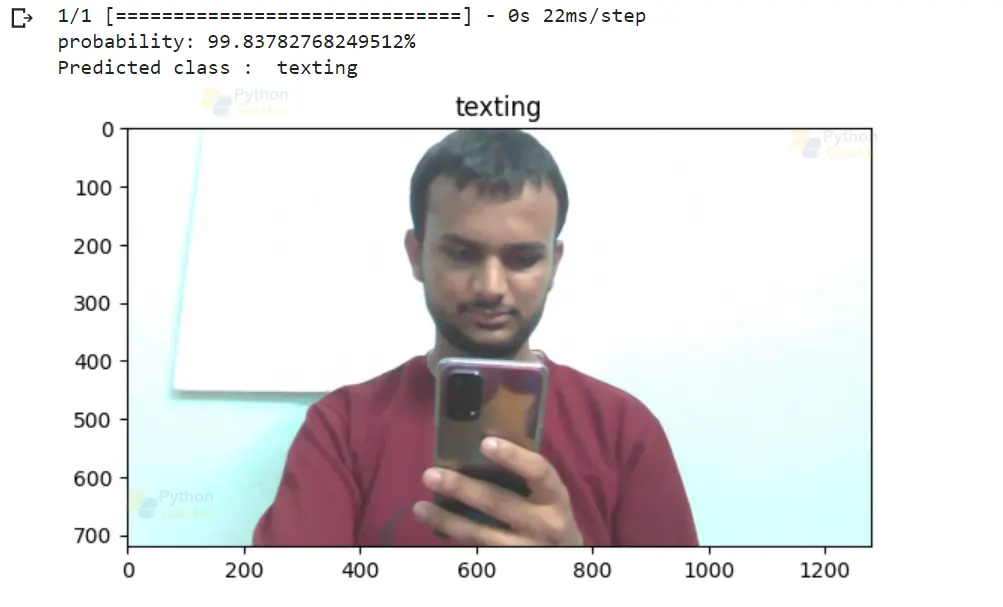

17. This function recognizes the activity in an image using the trained model. It takes the path of the test image as input, reads the image, and passes it to the model for prediction. The predicted class name is printed together with its probability, and the test image is then displayed with the predicted class name as the title.

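def recognize(test_image):
    # Predict class probabilities for a single image (a batch of size 1)
    result = model.predict(np.asarray([imread(test_image)]))
    # Locate the highest probability and map its column index to a class name
    itemindex = np.where(result==np.max(result))
    prediction = classes[itemindex[1][0]]
    print("probability: "+str(np.max(result)*100) + "%\nPredicted class : ", prediction)
    # Show the image with the predicted class as its title
    image = img.imread(test_image)
    plt.imshow(image)
    plt.title(prediction)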
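When the top prediction is uncertain, it can be useful to look at the runner-up classes as well. The sketch below ranks the k most likely classes from a probability vector; the helper name `top_k` and the sample values are hypothetical.

```python
import numpy as np

def top_k(probabilities, class_names, k=3):
    """Return the k most probable (class name, probability) pairs, best first."""
    probabilities = np.asarray(probabilities)
    order = np.argsort(probabilities)[::-1][:k]   # indices sorted by descending probability
    return [(class_names[i], float(probabilities[i])) for i in order]

demo_classes = ["calling", "laughing", "texting"]
demo_probs = [0.15, 0.05, 0.80]   # made-up probabilities for one image
ranking = top_k(demo_probs, demo_classes, k=2)
print(ranking)
```

With the trained model, the same helper could be applied to the first row of the array returned by `model.predict`, together with the `classes` array from the label encoding step.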

18. Call the recognize function on a test image.

recognize('/content/image.jpg')

Python OpenCV Human Activity Recognition Output

Model Accuracy

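We will evaluate the trained model on the held-out test split to measure its accuracy. The code below loads the test images and runs the evaluation.

from tqdm import tqdm  # progress bar for the loading loop

img_test = []
size = len(x_test)
# The test split was taken from Training_set.csv, so its images live in the train folder
for i in tqdm(range(size)):
    image = Image.open('/content/Human Action Recognition/train/' + x_test[i])
    img_test.append(np.asarray(image.resize((160,160))))

# evaluate() returns [loss, accuracy]; scale only the accuracy to a percentage
loss, acc = model.evaluate(np.asarray(img_test), y_test)
print(f"Accuracy: {acc * 100}")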

Output

40/40 [==============================] - 2s 33ms/step - loss: 2.6720 - accuracy: 0.8527

Accuracy: 85.26984238624573

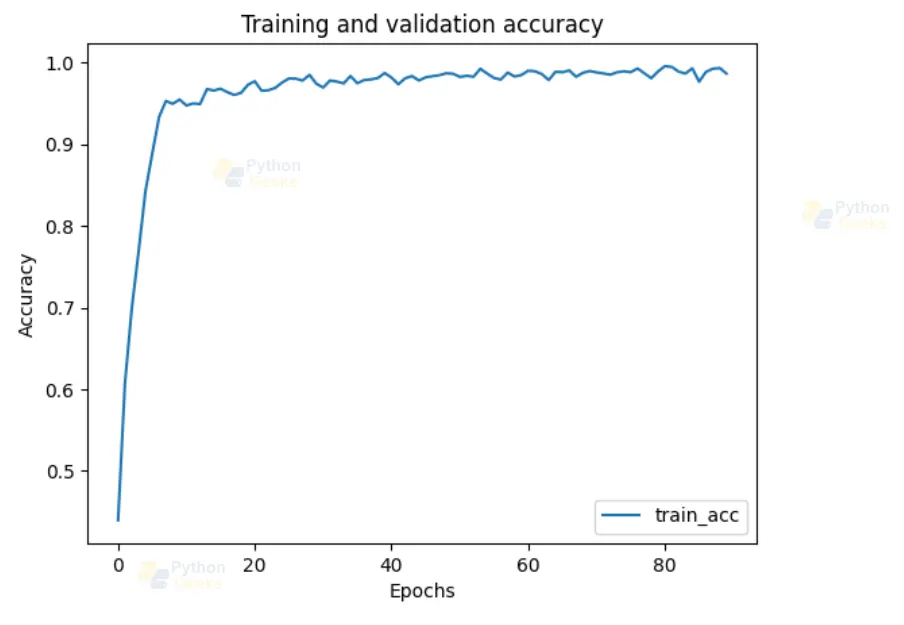
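The accuracy metric itself is simple to compute by hand: take the most probable class for each sample and compare it with the true class. The sketch below shows this on made-up one-hot labels and probabilities; the helper name `accuracy_from_predictions` is hypothetical.

```python
import numpy as np

def accuracy_from_predictions(y_true_onehot, y_prob):
    """Fraction of samples whose most probable class matches the true class."""
    true_idx = np.argmax(y_true_onehot, axis=1)
    pred_idx = np.argmax(y_prob, axis=1)
    return float(np.mean(true_idx == pred_idx))

# Four made-up samples over three classes; the third one is misclassified.
y_true_small = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]])
y_prob_small = np.array([[0.7, 0.2, 0.1],
                         [0.1, 0.6, 0.3],
                         [0.5, 0.3, 0.2],
                         [0.2, 0.7, 0.1]])
acc_small = accuracy_from_predictions(y_true_small, y_prob_small)
print(acc_small)
```

Applied to the output of `model.predict` on the test images and to `y_test`, this should agree with the accuracy that the evaluation reports.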

Graphs

We will visualize the class distribution and the training history using the Matplotlib library.

plt.bar(counts.index, counts.values)
plt.title('Distribution of classes in the training data')
plt.xlabel('Classes')
plt.ylabel('Count')
plt.xticks(rotation=45) # rotate the x-axis labels by 45 degrees
plt.show()

# Only training metrics are recorded because fit() was called without validation data
plt.plot(history.history['loss'], label='train_loss')
plt.title('Training loss')
plt.xlabel('Epochs')
plt.ylabel('Loss')
plt.legend()
plt.show()

plt.plot(history.history['accuracy'], label='train_acc')
plt.title('Training accuracy')
plt.xlabel('Epochs')
plt.ylabel('Accuracy')
plt.legend()
plt.show()

Conclusion

Human activity recognition using OpenCV and deep learning is a promising method for detecting and classifying human activities. Deep learning models like CNNs and RNNs capture spatial and temporal features of human activities and can achieve high accuracy in classification tasks. In this project, a frozen pre-trained VGG16 network with a small classification head reached about 85% accuracy on the held-out images. OpenCV provides image and video processing tools, such as frame capture and resizing, that can be used to feed such models. This approach has potential applications in healthcare, sports, and security. As technology advances, we can expect even better models for human activity recognition in the future.