Natural Language Processing – NLP in AI


Natural Language Processing (NLP) is a branch of artificial intelligence (AI) that lets people communicate with intelligent computer systems using a natural language such as English.

Natural language processing is essential when you want an intelligent system, such as a robot, to follow your commands, when you want to hear a decision from a dialogue-based clinical expert system, and so on.

The field of natural language processing (NLP) is concerned with programming computers to perform useful tasks with the natural languages that humans use. The input and output of an NLP system can be either speech or written text.

What exactly is NLP?

Natural Language Processing (NLP) is a branch of computer science that brings together human language and artificial intelligence. It is the technology that allows machines to comprehend, analyze, manipulate, and interpret human language. It helps developers organize information for tasks such as translation, automatic summarization, Named Entity Recognition (NER), speech recognition, relationship extraction, and topic segmentation.

History of NLP

(1940-1960) – Focus on Machine Translation (MT)

Natural Language Processing (NLP) began in the 1940s.

1948 – Birkbeck College in London introduced the first recognizable NLP application.

The 1950s – A conflict of views arose between linguistics and computer science. Chomsky published his first book, Syntactic Structures, in which he argued that language is generative.

In 1957, Chomsky also introduced Generative Grammar, a rule-based description of syntactic structures.

(1960-1980) – Flavored with Artificial Intelligence

The following were the major developments from 1960 to 1980:

Augmented Transition Networks (ATN)

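An Augmented Transition Network (ATN) is a finite-state machine extended with recursion and registers. It can recognize regular languages and, thanks to those extensions, more complex constructions as well.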

Case Grammar

Linguist Charles J. Fillmore developed Case Grammar in 1968. Case Grammar uses prepositions to express the relationship between nouns and verbs in languages such as English.

In Case Grammar, case roles can be defined to link certain kinds of verbs and objects.

For example, in “Neha smashed the mirror with the hammer,” Neha is the agent, the mirror is the theme, and the hammer is the instrument.

From 1960 through 1980, the following systems were important:

SHRDLU

Terry Winograd created the SHRDLU program in 1968-70. It lets users converse with the computer about objects that it can move. It understands commands like “pick up the green ball” and can also answer questions like “What is inside the black box?” SHRDLU’s key contribution is that it shows how syntax, semantics, and reasoning about the world can be combined into a system that understands natural language.

LUNAR

LUNAR is a well-known Natural Language database interface technology based on ATNs and Woods’ Procedural Semantics. It was able to convert complex natural language expressions into database queries and handled 78% of requests without errors.

1980 to the present

Until 1980, natural language processing systems relied on complex sets of handwritten rules. After 1980, NLP adopted machine learning algorithms for language processing.

In the early 1990s, NLP began to grow faster and achieve good accuracy, particularly for English grammar. Around 1990, electronic text collections also became available, providing a useful resource for training and testing natural language algorithms. Other contributing factors included the availability of computers with more memory and faster CPUs. The Internet was also a major factor in the evolution of natural language processing.

Speech recognition, machine translation, and machine text reading are examples of modern NLP applications. Combined, these applications allow artificial intelligence to gain knowledge of the world. Consider Amazon Alexa, a voice assistant: you can ask Alexa a question and it will reply.

Advantages of NLP

- NLP lets users ask questions about any subject and receive a direct response within seconds.

- NLP gives precise answers to questions; it does not return unnecessary or unwanted information.

- NLP enables computers to converse with people in their own languages.

- It saves a lot of time.

- Many businesses use NLP to improve the efficiency and accuracy of documentation processes and to find information in large databases.

Drawbacks of NLP

- NLP may fail to capture context.

- NLP can be unpredictable.

- NLP may require more keystrokes.

- An NLP system is usually built for a single, specific task, so its function is limited and it cannot readily adapt to a new domain.

Components of NLP

The two components of NLP are as follows:

1. Natural Language Understanding (NLU)

Natural Language Understanding (NLU) helps machines understand and analyze human language by extracting metadata from content, such as concepts, entities, keywords, emotion, relations, and semantic roles.

NLU is mostly used in business applications to understand a customer’s problem in both spoken and written language.

NLU involves the following tasks:

- Mapping the given input into a useful representation.

- Analyzing different aspects of the language.

2. Natural Language Generation (NLG)

Natural Language Generation (NLG) acts as a translator that converts computerized data into natural language. Its essential components are Text Planning, Sentence Planning, and Text Realization:

- Text Planning

This step retrieves the relevant content from a knowledge base.

- Sentence Planning

This step chooses the required words, forms meaningful phrases, and sets the tone of the sentence.

- Text Realization

This step maps the sentence plan onto the final sentence structure.

NLU is generally more difficult than NLG.

NLU’s Difficulties

a. Lexical ambiguity

This occurs at the most basic level, the word level: a single word can have more than one meaning.

b. Syntax-level ambiguity

A sentence can be parsed in more than one way.

c. Referential ambiguity

This arises when it is unclear what a referring expression, such as a pronoun, refers to.

Terminologies for Natural Language Processing

A. Phonology is the branch of linguistics that studies how sounds are organized systematically.

B. Morphology is the study of how words are formed from primitive meaningful units.

C. Morpheme: the primitive unit of meaning in a language.

D. Syntax: the arrangement of words to form a sentence. It also involves determining the structural role of words in the sentence and in phrases.

E. Semantics: the study of the meaning of words and of how words combine into meaningful phrases and sentences.

F. Pragmatics: the use and understanding of sentences in different situations, and how the situation affects the interpretation of a sentence.

G. World knowledge: general knowledge about the world.

NLP Phases

The five phases of NLP are as follows:

1. Lexical and Morphological Analysis

Lexical analysis is the first phase of NLP. In this phase, the input text is scanned as a stream of characters and converted into meaningful lexemes. The whole text is divided into paragraphs, sentences, and words.
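As a rough illustration of this phase, the sketch below divides a text into paragraphs, sentences, and words using only regular expressions from Python's standard library. The function names and the splitting rules (blank lines separate paragraphs; ".", "!" or "?" followed by whitespace ends a sentence) are simplifying assumptions made for this example, not a standard algorithm.

```python
import re

def split_sentences(paragraph: str) -> list[str]:
    """Split a paragraph after '.', '!' or '?' when whitespace follows."""
    parts = re.split(r"(?<=[.!?])\s+", paragraph.strip())
    return [p for p in parts if p]

def split_words(sentence: str) -> list[str]:
    """Return lowercase word tokens, dropping punctuation."""
    return re.findall(r"[a-z0-9']+", sentence.lower())

def lexical_analysis(text: str) -> list[list[list[str]]]:
    """Return the text as paragraphs -> sentences -> words."""
    paragraphs = [p for p in re.split(r"\n\s*\n", text) if p.strip()]
    return [[split_words(s) for s in split_sentences(p)] for p in paragraphs]

sample = "The bird pecks the grains. It is hungry!\n\nThe grains are small."
result = lexical_analysis(sample)
print(result)
```

Real tokenizers handle abbreviations, numbers, and hyphenation far more carefully; this sketch only shows the shape of the output.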

2. Syntactic Analysis (Parsing)

Syntactic analysis is used to check grammar and word layouts, as well as to show the relationships between words.

For example, the sentence “Agra goes to the Poonam” does not make sense in the real world, so the syntactic analyzer rejects it.

3. Semantic Analysis

The representation of meaning is the focus of semantic analysis. The literal meaning of words, phrases, and sentences is the main focus.

4. Discourse Integration

In discourse integration, the meaning of a sentence depends on the sentences that precede it and can in turn affect the meaning of the sentences that follow.

5. Pragmatic Analysis

Pragmatic analysis is the fifth and final phase of NLP. It applies a set of rules that characterize cooperative dialogues to help discover the intended effect of an utterance.

“Open the door,” for example, is interpreted as a request rather than an order.

What is the Purpose of NLP?

NLP lets us carry out tasks such as automated speech processing and automated text writing in less time. These tasks have a great many applications.

For example:

- Automatic summarization (generating a summary of a given text)

- Machine translation (translating one language into another)

Natural Language Processing Methodology

When the input is speech, the NLP process first builds text from it by speech-to-text conversion.

Two processes are involved in this mechanism:

- Natural Language Understanding

- Natural Language Generation

a. Comprehension of Natural Language (NLU)

We use natural language understanding to determine the meaning of a text. For NLU, we need to understand the nature and structure of each word, which means resolving several kinds of ambiguity:

Lexical Ambiguity

A word has more than one meaning.

Syntactic Ambiguity

A sentence has more than one parse tree.

Semantic Ambiguity

A sentence has more than one meaning.

Anaphoric Ambiguity

A phrase or word refers back to something mentioned earlier, but it is unclear which earlier item it refers to.
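Syntactic ambiguity can be made concrete by counting parse trees. The sketch below is a minimal CYK-style chart counter over a toy grammar; the grammar, the lexicon, and the example sentence are all assumptions chosen for illustration and do not come from a particular NLP system.

```python
from collections import defaultdict

# Toy grammar in binary form: (parent, left child, right child).
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),   # the PP modifies the verb phrase
    ("NP", "NP", "PP"),   # the PP modifies the noun phrase
    ("NP", "DET", "N"),
    ("PP", "P", "NP"),
]
LEXICON = {
    "i": ["NP"], "saw": ["V"], "the": ["DET"],
    "man": ["N"], "telescope": ["N"], "with": ["P"],
}

def count_parses(sentence: str) -> int:
    """Count the distinct parse trees that derive the sentence from S."""
    words = sentence.lower().split()
    n = len(words)
    # chart[(i, j)][A] = number of trees deriving words[i:j] from symbol A
    chart = defaultdict(lambda: defaultdict(int))
    for i, word in enumerate(words):
        for tag in LEXICON.get(word, []):
            chart[(i, i + 1)][tag] += 1
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):  # every split point inside the span
                for parent, left, right in BINARY_RULES:
                    chart[(i, j)][parent] += chart[(i, k)][left] * chart[(k, j)][right]
    return chart[(0, n)]["S"]

print(count_parses("I saw the man with the telescope"))  # 2
print(count_parses("I saw the man"))                     # 1
```

The first sentence gets two trees because “with the telescope” can attach either to the verb phrase (the seeing was done with a telescope) or to the noun phrase (the man has the telescope).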

b. Natural Language Generation (NLG)

In short, NLG is the automatic generation of text from structured data, written in a readable form with meaningful phrases and sentences. Natural language generation is a hard problem to solve. It is a subset of Natural Language Processing.

Natural language generation is divided into three proposed stages:

i. Text Planning

The content of the structured data is selected and ordered.

ii. Sentence Planning

Sentences are put together from the structured data to express the flow of information.

iii. Realization

Grammatically correct sentences are produced to represent the text.
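The three stages above can be sketched as three small functions over a structured record. Everything in this example is hypothetical: the weather record, its field names, and the tiny verb table are assumptions used only to show how content selection, sentence specification, and surface realization hand off to each other.

```python
# Hypothetical structured input; the field names are assumptions.
record = {"city": "Agra", "condition": "sunny", "high_c": 31, "station_id": "X17"}

def plan_text(data: dict) -> dict:
    """Stage 1: select the content worth mentioning, in a fixed order."""
    wanted = ("city", "condition", "high_c")
    return {key: data[key] for key in wanted if key in data}

def plan_sentences(content: dict) -> list[dict]:
    """Stage 2: group facts into sentence specifications and choose words."""
    specs = []
    if "city" in content and "condition" in content:
        specs.append({"subject": f"the weather in {content['city']}",
                      "verb": "be",
                      "complement": content["condition"]})
    if "high_c" in content:
        specs.append({"subject": "the temperature",
                      "verb": "reach",
                      "complement": f"{content['high_c']} degrees Celsius"})
    return specs

# Third-person singular forms for the verbs used above.
VERB_FORMS = {"be": "is", "reach": "reaches"}

def realize(spec: dict) -> str:
    """Stage 3: turn one sentence specification into a grammatical sentence."""
    sentence = f"{spec['subject']} {VERB_FORMS[spec['verb']]} {spec['complement']}"
    return sentence[0].upper() + sentence[1:] + "."

def generate(data: dict) -> str:
    return " ".join(realize(spec) for spec in plan_sentences(plan_text(data)))

print(generate(record))
# The weather in Agra is sunny. The temperature reaches 31 degrees Celsius.
```

Note that the `station_id` field is dropped during the first stage: deciding what not to say is part of planning.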

NLP Applications

NLP can be used in the following ways:

1. Answering Questions

Question Answering is concerned with developing systems that can automatically respond to human inquiries in natural language.

2. Spam Detection

Spam detection is a technique for detecting undesired e-mails before they reach a user’s inbox.
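One common statistical approach to this task is a Naive Bayes text classifier. The sketch below is a minimal version with add-one (Laplace) smoothing, trained on four made-up messages; the training data, labels, and function names are assumptions for illustration, and a real filter would need far more data and better tokenization.

```python
import math
from collections import Counter

def tokenize(text: str) -> list[str]:
    return text.lower().split()

def train(examples: list[tuple[str, str]]):
    """Count words per label and documents per label."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in examples:
        doc_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, doc_counts, vocabulary

def classify(text: str, model) -> str:
    """Return the label with the highest log-probability score."""
    word_counts, doc_counts, vocabulary = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        total_words = sum(counts.values())
        score = math.log(doc_counts[label] / total_docs)  # log prior
        for word in tokenize(text):
            # Add-one smoothing keeps unseen words from zeroing the score.
            score += math.log((counts[word] + 1) / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)

# Made-up training messages (assumption for this example).
model = train([
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch with the project team", "ham"),
])

print(classify("claim your free prize", model))      # spam
print(classify("project meeting on monday", model))  # ham
```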

3. Sentiment Analysis

Sentiment analysis is also known as opinion mining. It is used on the web to analyze the attitude, behavior, and emotional state of the sender. It is implemented through a combination of NLP and statistics: values (positive, negative, or neutral) are assigned to the text, and the mood of the context (happy, sad, angry, etc.) is identified.
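A very simple way to assign such values is a lexicon-based scorer. The sketch below uses small hand-made word lists and flips the polarity of a word that directly follows a negator; the word lists and the negation rule are assumptions for illustration, not a production-quality method.

```python
import re

# Tiny hand-made lexicons (assumptions for this example).
POSITIVE = {"good", "great", "happy", "love", "excellent"}
NEGATIVE = {"bad", "poor", "sad", "hate", "terrible"}
NEGATORS = {"not", "never", "no"}

def sentiment(text: str) -> str:
    """Label text as 'positive', 'negative', or 'neutral'."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = (token in POSITIVE) - (token in NEGATIVE)  # +1, -1, or 0
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity  # "not good" counts as negative
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("I love this great phone"))       # positive
print(sentiment("The service was not good"))      # negative
print(sentiment("The parcel arrived on Monday"))  # neutral
```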

4. Speech Recognition

The process of translating spoken words into writing is known as speech recognition. Mobile, home automation, video recovery, dictating to Microsoft Word, voice biometrics, voice user interface, and other applications use it.

5. Natural Language Understanding (NLU)

It converts large bodies of text into more formal representations, such as first-order logic structures, that are easier for computer programs to manipulate than natural language notations.

6. Extraction of information

One of the most essential uses of NLP is information extraction. It is used to extract structured data from machine-readable documents that are unstructured or semi-structured.
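As a small illustration of turning unstructured text into structured data, the sketch below pulls e-mail addresses, ISO-style dates, and dollar amounts out of a sentence with regular expressions. The patterns are deliberately simplified assumptions (for example, only `YYYY-MM-DD` dates are recognized), and the sample text is invented.

```python
import re

# Simplified patterns; real-world formats vary much more widely.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
AMOUNT = re.compile(r"\$\d+(?:\.\d{2})?")

def extract(text: str) -> dict[str, list[str]]:
    """Return the structured fields found in free text."""
    return {
        "emails": EMAIL.findall(text),
        "dates": DATE.findall(text),
        "amounts": AMOUNT.findall(text),
    }

note = ("Invoice sent to billing@example.com on 2024-03-15 for $199.00. "
        "Contact: sales@example.org.")
fields = extract(note)
print(fields)
```

Statistical and neural extractors generalize better than fixed patterns, but rule-based extraction like this is still common for well-defined formats.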

7. Chatbot

Implementing chatbots is one of the important applications of NLP. Many businesses use chatbots to provide customer chat services.

8. Spelling Correction

Word processing and presentation software, such as Microsoft Word and PowerPoint, uses NLP for spelling correction.
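A basic form of spelling correction picks the known word most similar to the misspelled one. The sketch below relies on `difflib.get_close_matches` from Python's standard library; the vocabulary and the 0.7 similarity cutoff are assumptions for this example, and it is not a description of how any commercial spell checker works.

```python
import difflib

# Small illustrative vocabulary (assumption for this example).
VOCABULARY = ["language", "natural", "processing", "grammar",
              "sentence", "machine", "translation"]

def correct(word: str, vocabulary: list[str] = VOCABULARY) -> str:
    """Return the closest vocabulary word, or the input if none is close."""
    if word in vocabulary:
        return word
    matches = difflib.get_close_matches(word, vocabulary, n=1, cutoff=0.7)
    return matches[0] if matches else word

print(correct("langauge"))  # language
print(correct("gramar"))    # grammar
print(correct("xyz"))       # xyz (no close match, left unchanged)
```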

9. Automated Translation

Machine translation converts text or speech from one natural language to another. Google Translate is an example.

NLP tools and approaches

1. Statistical NLP, Machine Learning, And Deep Learning

The first NLP applications were hand-coded, rules-based systems that could do some NLP tasks but couldn’t easily expand to meet an ever-increasing stream of exceptions or the growing volumes of text and voice input.

Statistical natural language processing (NLP) uses computer algorithms with machine learning and deep learning models to automatically extract, classify, and label parts of text and speech input, and then assign a statistical likelihood to each probable meaning.

Today, deep learning models and learning techniques based on convolutional neural networks (CNNs) and recurrent neural networks (RNNs) enable NLP systems that ‘learn’ as they work and extract ever more accurate meaning from huge volumes of raw, unstructured, and unlabeled text and voice data sets.

2. Python and the Natural Language Toolkit (NLTK)

For tackling specialized NLP tasks, the Python programming language provides a large choice of tools and frameworks. Many of them can be found in the Natural Language Toolkit, or NLTK, which is an open-source collection of libraries, programs, and educational resources for developing NLP programs.

The NLTK offers libraries for many of the above-mentioned NLP tasks, as well as libraries for subtasks like sentence parsing, word segmentation, stemming and lemmatization (word-trimming methods), and tokenization (for breaking phrases, sentences, paragraphs, and passages into tokens that help the computer better understand the text). It also offers libraries for developing capabilities like semantic reasoning, which allows users to draw logical inferences based on information retrieved from the text.

NLP Systems Examples

Let’s look at two examples that clarify our understanding of Natural Language Processing:

a. Customer reviews

Customer reviews are an important source of information for businesses, and NLP helps extract relevant information from them.

It also helps to increase customer satisfaction.

The more feedback is analyzed, the more relevant the services become. It also helps in understanding the customer’s needs.

b. Virtual digital assistants (VDAs)

Virtual digital assistants are currently the best-known form of artificial intelligence.

NLP APIs

Natural Language Processing APIs enable developers to connect human-to-machine communication and perform a variety of helpful functions, including speech recognition, chatbots, spelling correction, sentiment analysis, and more.

The following is a list of NLP APIs:

1. Natural Language API for Google Cloud

You can use the Google Cloud Natural Language API to extract useful information from unstructured text. The API supports entity recognition, sentiment analysis, syntax analysis, and content classification into more than 700 predefined categories. It also supports text analysis in a variety of languages, including English, French, Chinese, and German.

Pricing: Entity analysis costs $1.00 per 1,000 units per month for usage between 5,000 and 10,000,000 units.

2. Cloud NLP API

The Cloud NLP API is used to extend an application’s capabilities with natural language processing technology. It lets you perform NLP tasks such as sentiment analysis and language detection. It is simple to use.

The Cloud NLP API is completely free to use.

3. AYLIEN’s Text Analysis API

The AYLIEN Text Analysis API is used to extract meaning and insights from the textual content. It is available for both free and paid subscriptions starting at $119 per month. It’s simple to use.

The free tier allows 1,000 hits per day. Paid monthly subscriptions range from $199 to $1,399.

4. SYSTRAN’s translation API

The text is translated from the source language to the target language using the SYSTRAN Translation API. Language detection, text segmentation, named entity recognition, tokenization, and a variety of other tasks are all possible with its NLP APIs.

This API is completely free to use. Commercial users, on the other hand, must utilize the premium version.

5. Sentiment Analysis API

The Sentiment Analysis API, also known as an ‘opinion mining’ API, is used to determine the tone of a user’s text (positive, negative, or neutral). It is free for fewer than 500 requests per month. Its paid edition costs between $19 and $99 a month.

6. Speech to Text API

To convert speech to text, the Speech to Text API is employed.

The Speech to Text API is free for the first 60 minutes of conversion per month. Its paid version costs between $500 and $1,500 per month.

7. Chatbot API

You may use the Chatbot API to build intelligent chatbots for any service. It recognizes Unicode characters, classifies text, and supports several languages, among other features. It’s incredibly simple to use. It aids in the development of a chatbot for your web apps.

Chatbot API is free for the first 150 requests per month. You can also pay for it, with monthly subscriptions ranging from $100 to $5,000.

8. Watson API by IBM

The IBM Watson API combines a variety of advanced machine learning algorithms to allow developers to categorize text into a variety of bespoke categories. It supports a variety of languages, including English, French, Spanish, German, and Chinese. You can extract insights from texts, automate workflows, improve search, and interpret sentiment with the IBM Watson API. The key benefit of this API is that it is really simple to use.

Pricing: It offers a free 30-day IBM Cloud account trial to start with. You can also choose from a variety of paid plans.

Implementation Aspects of Syntactic Analysis

Researchers have developed many algorithms for syntactic analysis, but we consider only the following simple methods:

- Context-Free Grammar

- Top-Down Parser

Let’s take a closer look at them.

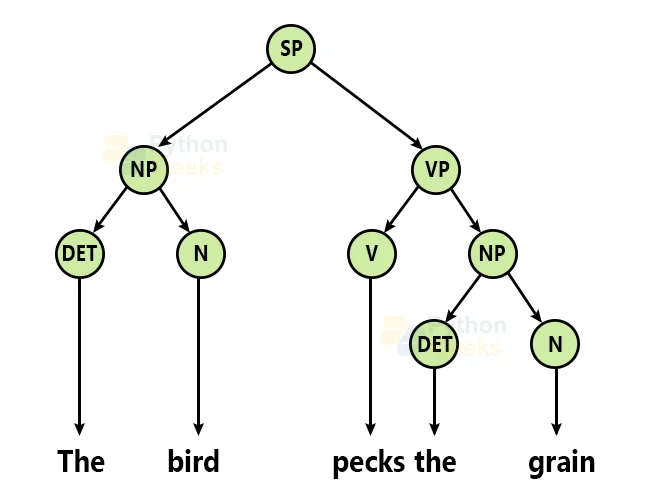

1. Context-Free Grammar

A context-free grammar consists of rewrite rules with a single symbol on the left-hand side. Let us create a grammar to parse the sentence −

“The bird pecks the grains”

Articles (DET) − a | an | the

Nouns − bird | birds | grain | grains

Noun Phrase (NP) − Article + Noun | Article + Adjective + Noun = DET N | DET ADJ N

Verbs − pecks | pecking | pecked

Verb Phrase (VP) − NP V | V NP

Adjectives (ADJ) − beautiful | small | chirping

The parse tree divides the language into structured sections, making it easier for the computer to interpret and process. A set of rewriting rules, which govern which tree structures are legal, must be constructed before the parsing algorithm may construct this parse tree.

These rules state that a symbol in the tree can be expanded into a sequence of other symbols. According to the first rule, if there are two strings, a Noun Phrase (NP) and a Verb Phrase (VP), then the string formed by NP followed by VP is a sentence. The rewrite rules for the sentence are as follows:

S → NP VP

NP → DET N | DET ADJ N

VP → V NP

Lexicon −

DET → a | the

ADJ → beautiful | perching

N → bird | birds | grain | grains

V → peck | pecks | pecking

The parse tree can be created as shown −

Now consider the rewrite rules above. Because V can be replaced by either “peck” or “pecks,” sentences such as “The bird peck the grains” are wrongly permitted; that is, the subject-verb agreement error is accepted as correct.
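The over-generation problem can be checked mechanically. The sketch below encodes the rewrite rules and lexicon listed above as a Python dictionary and enumerates every sentence the grammar derives, which is feasible only because this grammar has no recursion. The helper names `expand` and `accepts` are assumptions introduced for this example.

```python
from itertools import product

# Rewrite rules and lexicon from the text, one list per alternative.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["DET", "N"], ["DET", "ADJ", "N"]],
    "VP": [["V", "NP"]],
    "DET": [["a"], ["the"]],
    "ADJ": [["beautiful"], ["perching"]],
    "N": [["bird"], ["birds"], ["grain"], ["grains"]],
    "V": [["peck"], ["pecks"], ["pecking"]],
}

def expand(symbol: str) -> list[tuple[str, ...]]:
    """Return every word sequence derivable from the symbol."""
    if symbol not in GRAMMAR:  # a terminal (an actual word)
        return [(symbol,)]
    results = []
    for rhs in GRAMMAR[symbol]:
        # Combine every expansion of each symbol on the right-hand side.
        for parts in product(*(expand(s) for s in rhs)):
            results.append(tuple(word for part in parts for word in part))
    return results

LANGUAGE = {" ".join(words) for words in expand("S")}

def accepts(sentence: str) -> bool:
    return sentence.lower() in LANGUAGE

print(len(LANGUAGE))                         # 1728
print(accepts("The bird pecks the grains"))  # True
print(accepts("The bird peck the grains"))   # True (agreement error accepted)
print(accepts("The grains peck the bird"))   # True (nonsense accepted)
print(accepts("Bird the pecks"))             # False
```

The grammar checks only word order by category, so it accepts agreement errors and semantically odd sentences alike.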

Merit −

It is the simplest and most widely used style of grammar.

Demerits −

a. It is not highly precise. For example, “The grains peck the bird” is syntactically correct according to the parser; even though it makes no sense, the parser accepts it as a correct sentence.

b. Achieving high precision requires multiple sets of grammar. Completely different sets of rules may be needed to parse singular and plural variations, passive sentences, and so on, which can lead to an unmanageable number of rules.

2. Top-Down Parser

The parser starts with the S symbol and attempts to rewrite it into a sequence of terminal symbols that matches the classes of the words in the input sentence, continuing until the sequence consists entirely of terminal symbols.

These are then checked against the input sentence to see whether they match. If they do not, the process starts over with a different set of rules. This is repeated until a rule is found that describes the structure of the sentence.
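The procedure just described can be sketched as a recursive-descent parser with backtracking. The version below reuses the example grammar from the context-free grammar section; the function names and the nested-tuple tree format are assumptions made for this illustration. Python generators provide the backtracking: when one alternative fails, the next one is tried.

```python
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["DET", "N"], ["DET", "ADJ", "N"]],
    "VP": [["V", "NP"]],
}
LEXICON = {
    "DET": {"a", "the"},
    "ADJ": {"beautiful", "perching"},
    "N": {"bird", "birds", "grain", "grains"},
    "V": {"peck", "pecks", "pecking"},
}

def parse(symbol, words, pos):
    """Yield (tree, next_pos) for each way the symbol matches words[pos:]."""
    if symbol in LEXICON:  # word class: compare against the input word
        if pos < len(words) and words[pos] in LEXICON[symbol]:
            yield (symbol, words[pos]), pos + 1
        return
    for rhs in GRAMMAR[symbol]:  # try each rewrite rule in turn
        yield from match_sequence(symbol, rhs, words, pos, [])

def match_sequence(symbol, rhs, words, pos, children):
    """Match the remaining right-hand-side symbols from left to right."""
    if not rhs:
        yield (symbol, *children), pos
        return
    for child, next_pos in parse(rhs[0], words, pos):
        yield from match_sequence(symbol, rhs[1:], words, next_pos, children + [child])

def parse_sentence(sentence):
    """Return the first complete parse tree, or None if there is none."""
    words = sentence.lower().split()
    for tree, end in parse("S", words, 0):
        if end == len(words):  # every input word must be consumed
            return tree
    return None

print(parse_sentence("The bird pecks the grains"))
print(parse_sentence("Pecks the bird"))  # None
```

For “the beautiful bird ...”, the parser first tries NP → DET N, fails on “beautiful”, and then backtracks to NP → DET ADJ N. Repeating work after such failures is the source of the inefficiency noted below.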

Merit − It is straightforward to put into practice.

Demerits −

- It is inefficient, because the search process has to be repeated whenever an error occurs.

- It is slow.

NLP Libraries

1. Scikit-learn: a Python library that provides a variety of algorithms for building machine learning models.

2. NLTK (Natural Language Toolkit): a comprehensive toolkit for all NLP techniques.

3. Pattern: a web mining module for natural language processing and machine learning.

4. TextBlob: provides an easy interface for learning basic NLP tasks such as sentiment analysis, noun phrase extraction, and POS tagging.

5. Quepy: transforms natural language questions into database queries.

6. SpaCy: an open-source NLP library that can be used for data extraction, data analysis, sentiment analysis, and text summarization.

7. Gensim: works with large datasets and processes data streams.

Applications of Natural Language Processing

We can do the following with NLP:

- Summarizing blocks of text.

- Creating chatbots.

- Machine translation.

- Fighting spam.

- Extracting information.

- Generating keyword tags automatically.

- Identifying the types of entities extracted.

- Using sentiment analysis to identify the sentiment of a string.

- Reducing words to their root form.

- Question answering.

- Customer service.

- Market analysis.

Natural Language Processing Importance

Natural Language Processing (NLP) is extremely important.

Consider the following two sentences to better appreciate the value of natural language processing:

“Every service level agreement should include cloud computing insurance.”

“Even in the cloud, a decent SLA guarantees a better night’s sleep.”

A system that uses NLP will recognize that cloud computing is an entity, that “cloud” is a shortened form of cloud computing, and that SLA stands for service level agreement.

Vague elements of this kind appear regularly in human language, and machine learning algorithms have historically been poor at interpreting them. With the rapid advances in deep learning and artificial intelligence, algorithms can now interpret them effectively.

That covers Natural Language Processing in artificial intelligence.

Conclusion

Natural language processing is critical to the advancement of technology and how people interact with it. Chatbots, cybersecurity, search engines, and big data analytics are just a few examples of real-world uses in both the commercial and consumer worlds. NLP is likely to continue to be a key element of industry and everyday life, despite its challenges.