Heuristic Search in AI

A heuristic search strategy is a type of artificial intelligence (AI) search that aims to identify a good, but necessarily perfect, the solution from a set of choices. This method produces decisions by ranking all of the options accessible at each branch of a search and then selecting the best option from the list. Heuristic searching, rather than focusing on finding an optimal solution like other search methods, is designed to be rapid, and so seeks the most acceptable choice within a given time limit or memory space.

A heuristic is a method for investigating search calculations. It evaluates the available data at each stretching step before deciding which branch to choose. It accomplishes this by strategically placing additional possibilities. Any device that is commonly successful but does not guarantee success in every case is referred to as a heuristic.

History

In the 1970s and 1980s, psychologists Daniel Kahneman and Amos Tversky pioneered the study of heuristics in human decision-making. However, Herbert A. Simon, a Nobel Laureate whose primary study focus was problem-solving, was the first to introduce this concept.

Why Are Heuristics So Important?

We require heuristics to get an answer that is sufficient for the problem in a reasonable amount of time. Heuristics can assist firms in making quick decisions in complex situations with limited resources and time by using shortcuts and estimated calculations. The majority of heuristic methods rely on mental shortcuts to make decisions based on previous experiences.

We were able to reduce this to a polynomial number using Heuristic Search. We use heuristic search in AI because it allows us to apply it in situations when we can’t find known calculations.

AI Heuristic Search Methodologies

In a nutshell, such heuristic strategies can be classified into two groups:

1. Direct Heuristic Search in AI

Blind Search, Uninformed Search, and Blind Control Strategy are all terms used to describe these types of searches. Because they need a lot of time or memory, these aren’t always possible.

They utilize an arbitrary sequence of operations to search the entire state space for a solution.

a. Greedy Best First Search in AI

The greedy best-first search algorithm always chooses the trail that appears to be the most appealing at the time. We expand the node that is nearest to the goal node in the best-first search algorithm, and so the closest cost is evaluated using a heuristic function.

This type of search consistently selects the path that appears to be the best at the time. It’s a cross between BFS and DFS. It employs heuristic limit and search techniques. The BFS allows us to combine the benefits of both estimations.

b. A*Search in AI

The most well-known type of best-first interest is a*search. It employs the heuristic limit h(n), as well as cost, to arrive at the center point n from the earliest beginning point state g(n). It has strong UCS features and an insatiable best-first request, allowing it to effectively deal with the problem.

Using the heuristic limit, an A* search process finds the shortest path through the chase space. This chase count expands fewer interest trees, resulting in a more ideal result.

A* count is similar to UCS except it utilises g(n)+h(n) instead of g(n) . It is formulated using weighted graphs, implying that it can locate the shortest path with the least distance and time cost.

As a result, AI’s A* algorithm is a well-informed best-first search algorithm.

2. Weak Heuristic Search in AI

Informed Search, Heuristic Search, and Heuristic Control Strategy are some other names for this. These are effective when used effectively on the relevant types of tasks, and they typically require domain-specific data.

This additional information is required to compute preference among child nodes to explore and extend. A heuristic function is connected with each node. Best First Search (BFS) and A* are two examples.

Before we go into detail about specific tactics, let’s have a look at some of the most common ones.

a. Uniform Cost Search

Essentially, it acts as a mastermind in increasing the cost of the path to a center point. It also consistently develops the lowest cost center point.

The basis node is formed via uniform-cost search, which grows nodes based on their path costs. It’s frequently used to solve any graph/tree when the lowest cost is desired.

Even though it is unclear from the Breadth-First chase if each advancement has a proportionate cost. It investigates cost-extensive cost-extensive cost-extensive cost-extensive cost-extensive cost-

b. Iterative Deepening Depth First Search

Iterative Deepening Depth First Search (IDDFS) is a strategy in which DFS cycles are repeated with increasing cutoff points until the target is found. IDDFS is as good as BFS, but it requires a lot less memory.

It visits the centers in the request tree in a similar solicitation as significance first chase at each accentuation, but the overall solicitation whereby center points are visited first is sufficient breadth-first.

c. Depth First Search in AI

It is based on the LIFO possibility. As far as Last In First Out is concerned. In the same way, the LIFO stack data structure was completed in recursion. Along these lines, it used to create a hazy course of action for centers from the Breadth-First approach, but only in the case of varying demand.

From the root to the leaf center point, the way is taken care of in each accentuation. As a result, shop centers are faced with pressing space requirements. The additional room is bm, with a factor of b and a significance of m.

Disadvantages of Depth First Search

- As the estimation may not come to an end and continue in an unimaginable manner. A response to this problem will now take on a cut-out significance.

- In the odd event, if the optimum cut-off is d, and the taken cut-out is smaller than d, this estimate may fail miserably.

- If d is smaller than the defined cut-off, then the execution time will increase.

- Its multidimensional nature is dependent on a variety of factors. It is unable to distinguish between duplicate center points.

d. Bidirectional Search in AI

As the name implies, this can be used in two ways. It works with two people looking at the same run at the same time, with one starting at the source and the other going backward from the goal to the source.

The data structure should be negotiated between the two inquiries. The most restrictive route between the source (beginning center) and the goal center point is determined using a guided outline.

The two missions will begin at different locations, and the estimation will end when the two requests meet in the middle. It is a faster strategy that reduces the amount of time spent examining the graph.

When the beginning center point and the target center are distinct and visible, this technique is effective. The two are equivalent in terms of spreading factors.

e. Breadth First Search in AI

BFS is a Heuristic Search technique for diagramming data or looking through a tree or intersection structures. In an accurate breadthwise structure, the estimation profitably visits and means all of the major centers in a graph.

This count selects a single center point (starting or source point) in a diagram and then visits all of the centers in the vicinity. Keep in mind that BFS visits each of these centers on its own.

When the calculation has visited and calculated the starting center point, it next moves on to the nearest unvisited center points and evaluates them. All center points are stepped after they’ve been visited. These accentuations will continue until all of the graph’s center points have been visited and verified in a viable manner.

Hill Climbing in AI

Hill Climbing is a type of heuristic search in the field of Artificial Intelligence for logical progression issues. It attempts to find a good enough response for the issue given a set of data sources and a better-than-average heuristic limit. This line of action might not be the best in the long run.

According to the above description, logical headway concerns imply that inclination climbing deals with situations where we need to expand or confine a specific actual boundary by selecting information sources.

For instance, in the Model Traveling Salesman issue, we must limit the division passed by the salesperson.

The term ‘heuristic search’ implies that this interest estimation may not yield the best solution to the problem. In any event, it will provide a decent game plan in a reasonable amount of time.

A heuristic limit is a limit that ranks all possible options at any increasing step in the search for figures subject to the information provided. It allows the estimator to choose the optimal course from a list of options.

Features of Hill Climbing

1. Produce and Test variation

The Hill Climbing strategy is a version of the Generate and Test approach. The Generate and Test technique generates data that can be used to help determine which bearing to move in the inquiry space.

2. Use of Greedy Approach

Calculate the amount of time it takes to climb a hill The search progresses down the path that lowers the cost.

3. No Backtracking

It does not retrace the chase space since it does not remember previous states.

Types of Hill Climbing in AI

1. Simple Hill Climbing

Simple Hill Climbing is the simplest method for performing a slope climbing computation. It simply evaluates all neighbor hub states at the same time and selects the one, which increases current expense and is set as the current state.

It just checks its one replacement state, and if it finds it to be superior to the current condition, it moves to another similar state.

This has the following features:

- Less time-consuming

- A less-than-ideal arrangement that isn’t guaranteed

2. Steepest Ascent Hill Climbing

The steepest-Ascent calculation combines several fundamental slope-climbing calculations. It looks at all of the nearby nodes first, then chooses the node that is closest to the answer state

as the next node.

This calculation examines all of the current state’s surrounding hubs and selects the one that is

closest to the objective state. It takes longer since it searches for different neighbors.

3. Stochastic Hill Climbing

Before moving, stochastic slope climbing does not consider all of its neighbors. As part of the search process, it employs randomness. It’s also an area search algorithm, which means it tweaks one solution and explores a small part of the search space until it finds the local optima.

This suggests that it should be employed on unimodal optimization issues or after a global optimization approach has been applied. This calculation randomly selects one neighbor hub and determines whether to use it as the current state or to investigate another state.

Let’s have a look at the simple Hill-Climbing algorithm.

1. If the first state is not the desired one, stop and try again. Make the initial state current if it isn’t already.

2. Loop until the solution is found or there are no more new operators to apply to the current state:

A. Choose a new operator to apply to the current state of production.

B. Assess the new situation:

Stop and return success if you’re in a goal state.

If it’s better than the present condition, go ahead and make it the current state.

Even if not better than the current state, continue until the solution is reached.

C. Exit.

Hill Climbing’s Consequence

1. Local Maximum

All of the states around it have values that are lower than the current one. The Greedy Approach means that we will not be shifting to a lower state as a result of its implementation. Even though a better arrangement may have existed, the procedure is now complete. We utilize backtracking as a workaround.

2. Plateau

Its neighbors are all worth the same. This makes choosing a course challenging. To avoid this, we make a haphazardly large jump.

3. Ridge

Every conceivable course of progress is descending at an edge. This gives it the appearance of a pinnacle and brings the procedure to a close. To avoid this, we can use at least two guidelines before testing.

Constraint Satisfaction Problems

A constraint is nothing more than a restriction or a limitation. To address problems with AI, we may need to adhere to specific limits. Let’s see if we can solve a problem this way.

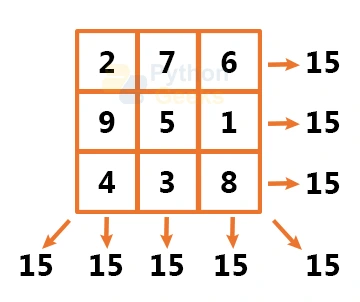

Let’s talk about a magic square for a moment. This is a grid of numbers, usually integers, that are organized in a square pattern. The sum of the integers in each row, column, and diagonal equals a constant we call the Magic Constant. Let’s put this into practice with Python.

def magic_square(matrix):

size=len(matrix[0])

sum_list=[]

for col in range(size): #Vertical sum

sum_list.append(sum(row[col] for row in matrix))

sum_list.extend([sum(lines) for lines in matrix])#Horizontal sum

result1=0

for i in range(0,size):

result1+=matrix[i][i]

sum_list.append(result1)

result2=0

for i in range(size-1,-1,-1):

result2+=matrix[i][i]

sum_list.append(result2)

if len(set(sum_list))>1:

return False

return True

Now let’s run this code

>>> magic_square([[1,2,3],[4,5,6],[7,8,9]])

False

This isn’t a magic square, by the way. The rows/columns/diagonals do not add up to the same value. Let’s attempt a different set of lists this time.

>>> magic_square([[2,7,6],[9,5,1],[4,3,8]])

True

Surely, this is a magic square, because all of the values sum up to the constant 15 in all directions!

Simulated Annealing Heuristic Search in AI

It is an algorithm that never moves towards lower esteem that is likely to be incomplete, causing it to stall on a close extreme.

Also, if the calculation uses an irregular walk, such as relocating a replacement, it may finish but not be proficient.

Mimicked Annealing is a method of calculating proficiency and culmination.

In terms of metallurgy, annealing is a process that involves solidifying a metal or glass at a high temperature and then gradually cooling it to a low-vitality crystalline condition.

In reenacted toughening, a similar approach is used, where the computation chooses an arbitrary move rather than the ideal step.

When erratic movement improves the situation, it proceeds in the same manner.

Something other is that the calculation either follows the path with a probability of less than 1 or it moves downhill and chooses a different path.

Best-First Search (BFS) Heuristic Search in AI

BFS is a well-informed search that uses an evaluation function to determine which neighboring is the most promising before moving on to the next step. Without considering a cost function, breadth- and depth-first searches blindly explore pathways.

BFS, on the other hand, isn’t the same. To store node costs, we utilize a priority queue. Let’s look at the pseudocode for BFS Heuristic Search.

1: List OPEN is defined with a single node s– the start node.

2: Return failure if the list is empty.

3: Remove node n (the highest-scoring node) from the list and move it to the CLOSED list.

4: Node n should be expanded.

5: Return success and trace path from goal node to s to return the solution if any successor to n is the goal node.

6: Use the f(n) evaluation function. If the node isn’t already in one of the lists, add it to the OPEN list.

7: Return to step 2.

All of this was covered in AI Heuristic Search Techniques. I hope you found our explanation to be helpful.

Instances of Heuristics in Daily Life

Some real-life instances of heuristics that people employ to solve problems include:

1. Common Sense

Common sense is a heuristic that is used to solve an issue based on an individual’s observations.

2. Rule Of Thumb

The term “rule of thumb” is also used in heuristics. This heuristic enables a person to make an approximation without conducting a thorough search.

3. Working Backwards

Working backward allows a person to solve an issue by believing the problem has already been solved and working backward in their imaginations to discover how far a solution has been reached.

4. Familiarity Heuristic

It helps a person to tackle a problem based on the notion that they are familiar with the circumstance, so they should respond in the same way they did previously.

5. Educated Guess

It enables a person to conclude without conducting a thorough investigation. When someone uses it, they think about what they’ve seen in the past and apply that knowledge to a circumstance where no definitive answer has yet been determined.

6. Availability Heuristic

The availability heuristic allows a person to assess a situation based on previous experiences with similar situations.

Types of heuristics

The availability heuristic, affect heuristic, and the representative heuristic is all examples of heuristics. Each sort of heuristic has a function to play in decision-making. The availability heuristic, the Affect heuristic, and the Representative heuristic will be discussed.

1. Representative heuristic

It happens when we compare the chance of one event to the probability of another.

The representational heuristic can be understood using the example of product packaging, as buyers prefer to identify a product’s quality with its outward package. If a company’s products are packaged in a way that reminds you of a high-quality, well-known product, buyers will associate that product with the same level of quality as the branded product.

As a result, rather than evaluating a product based on its quality, shoppers compare products based on package resemblance.

2. Affect heuristic

It is based on the negative and positive emotions associated with specific stimuli. It contains impulsive feelings based on previous views. Its hypothesis states that one’s emotional response to a stimulus might influence one’s decisions.

When people take the time to closely assess a situation, they may make decisions based on their emotional reactions.

3. Availability heuristic

The availability heuristic is believed to be the judgment that people make regarding the possibility of an event based on information that immediately comes into mind. People often base their decisions on prior knowledge or experience of an event. It enables a person to assess a situation based on previous experiences with similar situations.

Heuristics’ Limitations

The heuristic has certain drawbacks in addition to its advantages.

- Heuristics can help us solve problems and speed up our decision-making process, but they can also bring errors. Just because something worked correctly in the past does not indicate it will work again.

- If we continually rely on existing solutions or heuristics, it will be difficult to come up with new ideas or solutions.

Conclusion

As a result, we addressed Heuristic Search in AI in this Python AI tutorial. While we mentioned a few strategies that fit into this category, we also briefly explored BFS, Hill Climbing, Simulated Annealing, and CSP.

We also used Python to implement CSP. Still, feel free to ask any questions about Heuristic Search Techniques in the comment section.